This learning piece provides a step-by-step guide for developing your 3D Object Recognition application, from data collection to deployment. Learn about the different approaches to data collection and preparation, the importance of feature engineering, and the process of selecting and training a machine learning model. Discover best practices for evaluating your model’s performance and deploying it for real-world use.

In Brief: My 6-Step Process to Build 3D Object Recognition Apps

From my late nights at the office, here is the build process I follow to create a 3D object recognition solution.

Your 3D object recognition build process should be approached in a modular and iterative manner. This is the most crucial takeaway about developing a 3D object recognition application.

Why Wait?

You can start taking action and getting hands-on with the A to Z recipe for building 3D Apps.

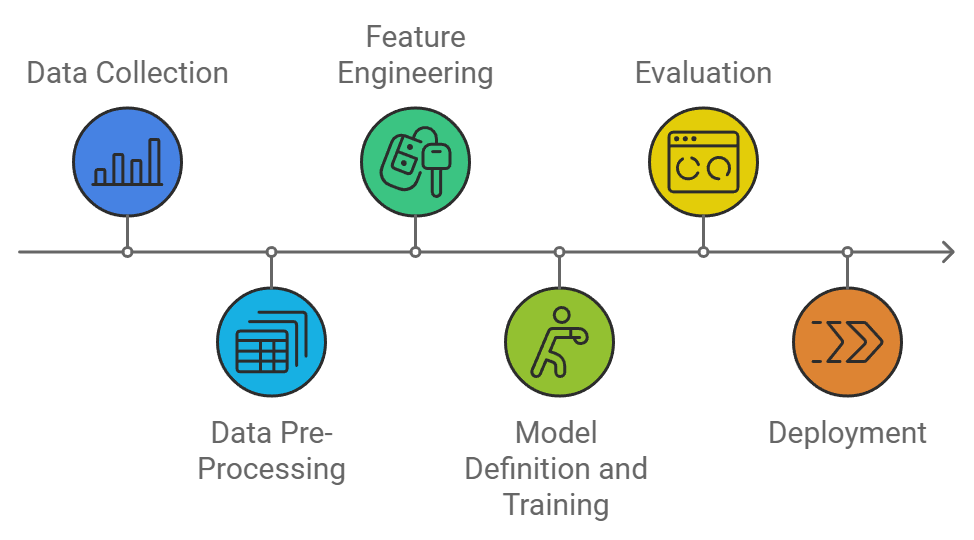

For illustration, here is the overall process:

Now, here are some structuring thoughts on the overall subject.

Additional Resources on 3D Object Recognition

3D object detection has emerged as a pivotal task, enabling machines to perceive and understand the three-dimensional structure of the world around them. Unlike traditional 2D object detection, which identifies objects within images or videos, 3D object detection aims to locate and classify objects in a 3D space, providing information about their position, orientation, and dimensions.
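To make “position, orientation, and dimensions” concrete, here is a minimal sketch of one common way to parameterize a single 3D detection: a center, a size, and a heading (yaw) angle around the vertical axis. The `Box3D` class and its field names are hypothetical. The conventions are assumptions: z points up, yaw rotates about z, and length lies along x before rotation. Datasets and toolkits differ on axis order and angle conventions.

```python
import math
from dataclasses import dataclass


@dataclass
class Box3D:
    """Hypothetical 3D detection record: z-up frame, yaw about the z axis."""

    label: str
    center: tuple[float, float, float]  # position (x, y, z) of the box center
    size: tuple[float, float, float]    # dimensions (length, width, height)
    yaw: float = 0.0                    # orientation around z, in radians

    def volume(self) -> float:
        length, width, height = self.size
        return length * width * height

    def corners(self) -> list[tuple[float, float, float]]:
        """Return the 8 box corners after rotating by yaw and translating to the center."""
        cx, cy, cz = self.center
        length, width, height = self.size
        cos_y, sin_y = math.cos(self.yaw), math.sin(self.yaw)
        points = []
        for dx in (-length / 2, length / 2):
            for dy in (-width / 2, width / 2):
                for dz in (-height / 2, height / 2):
                    # 2D rotation in the x-y plane; height is unaffected by yaw
                    points.append((cx + cos_y * dx - sin_y * dy,
                                   cy + sin_y * dx + cos_y * dy,
                                   cz + dz))
        return points


car = Box3D("car", center=(10.0, 2.0, 0.75), size=(4.0, 2.0, 1.5), yaw=math.pi / 2)
print(car.volume())        # 12.0
print(len(car.corners()))  # 8
```

With a yaw of 90 degrees, the 4 m length now spans the y axis and the 2 m width spans x. The height is unchanged.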

Critical Challenges in 3D Object Detection

While 3D object detection has made significant strides, several challenges remain:

- Data Scarcity: Obtaining large-scale, high-quality 3D datasets is often difficult due to the cost and complexity of data acquisition methods.

- Occlusion and Clutter: Objects in real-world scenes can be occluded by others or surrounded by clutter, making it challenging for algorithms to accurately detect and localize them.

- Computational Cost: 3D object detection algorithms can be computationally expensive, requiring powerful hardware to achieve real-time performance.

Techniques for 3D Object Detection

Several techniques have been developed for 3D object detection, including:

- LiDAR-Based Methods: LiDAR (Light Detection and Ranging) sensors emit laser pulses and time their return to measure the distance to objects, producing accurate 3D point clouds. Various algorithms can then process these point clouds to detect objects.

- Camera-Based Methods: 3D object detection can also be achieved using cameras by leveraging techniques such as stereo vision, monocular depth estimation, and deep learning models.

- Fusion Approaches: Combining information from multiple sensors, such as LiDAR and cameras, can improve the accuracy and robustness of 3D object detection.
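The geometry behind the first two families fits in a few lines. A LiDAR measures range by time of flight, r = c·Δt/2, because the pulse travels out and back. Each return is usually reported as a range plus two beam angles, which convert to x, y, z. A calibrated, rectified stereo pair recovers depth from disparity as Z = f·B/d, where f is the focal length in pixels, B is the baseline, and d is the disparity.

The sketch below assumes a z-up frame, with azimuth measured from the x axis and elevation from the horizontal plane. Real sensors document their own angle conventions, and the function names are illustrative.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def tof_range(round_trip_s: float) -> float:
    """LiDAR range from the round-trip time of a laser pulse: r = c * t / 2."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def lidar_return_to_xyz(range_m, azimuth, elevation):
    """Convert one return (range in m, angles in radians) to Cartesian (x, y, z).

    Assumed convention: azimuth from the x axis in the x-y plane,
    elevation measured up from that plane.
    """
    horizontal = range_m * math.cos(elevation)
    return (horizontal * math.cos(azimuth),
            horizontal * math.sin(azimuth),
            range_m * math.sin(elevation))


def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means the point is at infinity)")
    return focal_px * baseline_m / disparity_px


r = tof_range(100e-9)                    # a 100 ns round trip is roughly 15 m
print(round(r, 2))
print(lidar_return_to_xyz(r, 0.0, 0.0))  # a point straight ahead on the x axis
print(stereo_depth(700.0, 0.12, 42.0))   # about 2 m
```

The stereo formula also shows why camera-based depth degrades with distance. Disparity shrinks as depth grows, so a one-pixel error costs more the farther away the object is.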

My Recommendations for 3D Object Recognition

In addition to modularity, high-quality data and proper preparation are essential for successful 3D Object Recognition Solutions. A comprehensive evaluation strategy is critical to understanding the model’s performance. I also suggest starting with simpler solutions and gradually increasing complexity in model selection and deployment strategy. I cannot stress enough the importance of cleaning and preprocessing data to remove noise and ensure it is formatted correctly for the chosen model.

Finally, I want to highlight the need for a thorough evaluation, using appropriate metrics and benchmarking to compare different models and choose the best solution.

The 6-Step Workflow (3D Object Recognition)

Here is the 6-stage workflow that I propose for 3D Object Recognition:

1. Data Collection

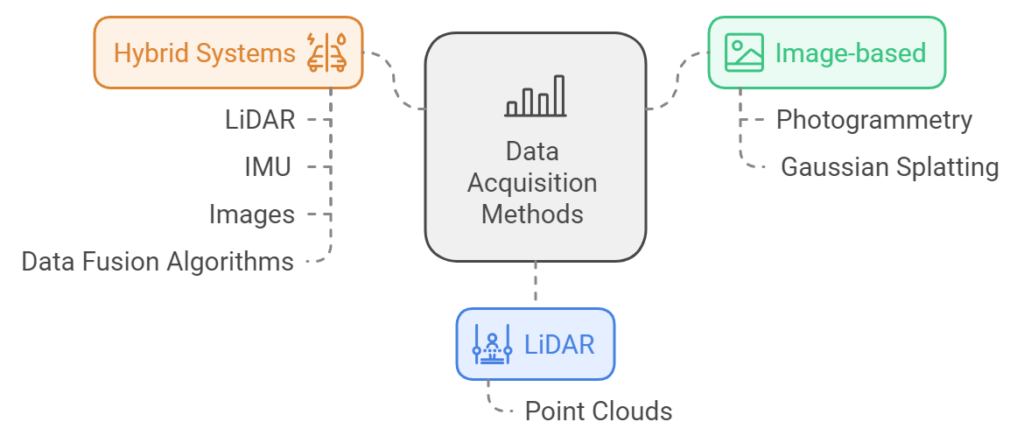

- Determine the appropriate data acquisition method: LiDAR, image-based (Photogrammetry, Gaussian Splatting), or hybrid (LiDAR, IMU, images).

- Understand the data format for each method: point clouds for LiDAR, images for image-based, and a combination for hybrid systems.

- Consider data fusion algorithms for hybrid systems.
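For hybrid systems, one of the simplest fusion steps is to colorize LiDAR points. Each point is projected into a synchronized camera image with the pinhole model, u = f_x·X/Z + c_x and v = f_y·Y/Z + c_y, and picks up the color of the pixel it lands on.

This minimal sketch rests on several assumptions:
- The points are already expressed in the camera frame, with extrinsic calibration applied and z pointing forward.
- The intrinsics `fx`, `fy`, `cx`, `cy` are known.
- The image is a row-major list of RGB tuples.

It ignores lens distortion and occlusion, which production fusion code has to handle.

```python
def colorize_points(points_cam, image, fx, fy, cx, cy):
    """Attach an RGB color to each 3D point that projects inside the image.

    points_cam: iterable of (x, y, z) in the camera frame, z forward (assumed).
    image: list of rows, each a list of (r, g, b) tuples, indexed image[v][u].
    Returns (x, y, z, r, g, b) for the points that land in the frame.
    """
    height, width = len(image), len(image[0])
    colored = []
    for x, y, z in points_cam:
        if z <= 0:  # behind the camera: cannot be seen
            continue
        u = round(fx * x / z + cx)  # column index (pinhole projection)
        v = round(fy * y / z + cy)  # row index
        if 0 <= u < width and 0 <= v < height:
            colored.append((x, y, z, *image[v][u]))
    return colored


# Toy 4x4 black image with one red pixel at row 1, column 2
image = [[(0, 0, 0)] * 4 for _ in range(4)]
image[1][2] = (255, 0, 0)
points = [(0.0, -0.5, 1.0), (0.0, 0.0, -1.0), (10.0, 0.0, 1.0)]
print(colorize_points(points, image, fx=2.0, fy=2.0, cx=2.0, cy=2.0))
# [(0.0, -0.5, 1.0, 255, 0, 0)]
```

Each of the three toy points tests a different path. The first lands on the red pixel. The second is behind the camera. The third projects outside the frame.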

2. Data Pre-processing

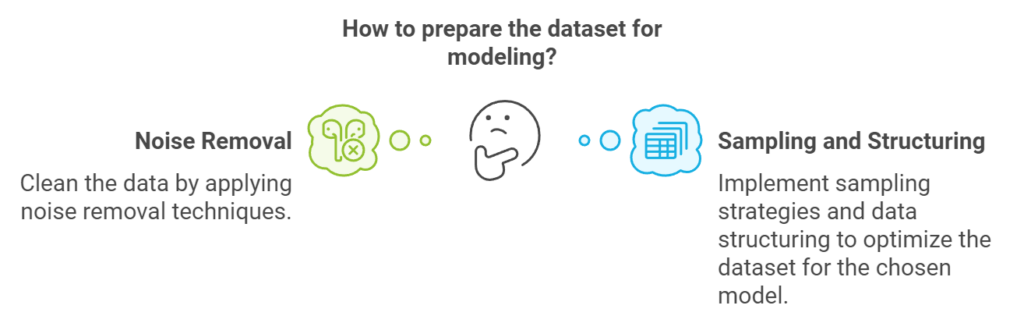

- Clean the data by applying noise removal techniques.

- Implement sampling strategies and data structuring to optimize the dataset for the chosen model.
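Two common building blocks for this stage are statistical outlier removal and voxel-grid downsampling. Statistical outlier removal drops points whose mean distance to their k nearest neighbors is unusually large. Voxel-grid downsampling replaces all points in each cubic cell by their centroid.

The sketch below is a brute-force, standard-library illustration of both. The parameters `k`, `std_ratio`, and `voxel_size` are illustrative defaults. The O(n²) neighbor search is only suitable for small clouds; libraries such as Open3D ship optimized versions of both operations.

```python
import math
from collections import defaultdict
from statistics import mean, pstdev


def remove_statistical_outliers(points, k=4, std_ratio=2.0):
    """Keep points whose mean k-NN distance is within mean + std_ratio * std."""
    mean_dists = []
    for i, p in enumerate(points):
        # Brute-force neighbor search: fine for a sketch, too slow for real scans
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_dists.append(mean(dists[:k]))
    threshold = mean(mean_dists) + std_ratio * pstdev(mean_dists)
    return [p for p, d in zip(points, mean_dists) if d <= threshold]


def voxel_downsample(points, voxel_size):
    """Replace the points falling in each cubic voxel by their centroid."""
    voxels = defaultdict(list)
    for p in points:
        key = tuple(math.floor(c / voxel_size) for c in p)  # integer voxel index
        voxels[key].append(p)
    return [tuple(sum(axis) / len(members) for axis in zip(*members))
            for members in voxels.values()]


# A flat 5 x 5 grid (1 m spacing) plus one far-away noisy return
cloud = [(float(x), float(y), 0.0) for x in range(5) for y in range(5)]
cloud.append((50.0, 50.0, 50.0))
clean = remove_statistical_outliers(cloud)
print(len(cloud), len(clean))             # 26 25
print(len(voxel_downsample(clean, 2.0)))  # 9
```

Removing noise before downsampling keeps an isolated outlier from becoming a voxel centroid of its own.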

3. Feature Engineering

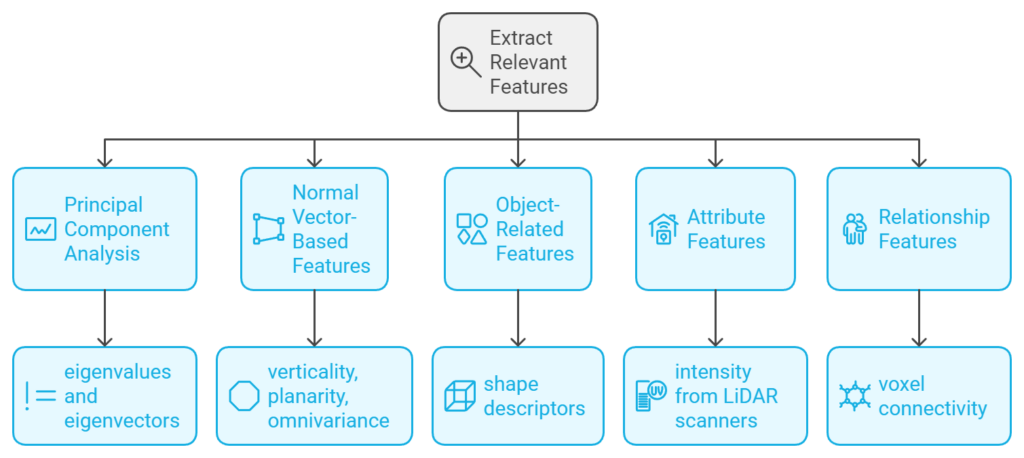

- Extract relevant features for 3D object detection using techniques like:

  - Principal component analysis (eigenvalues and eigenvectors), from which covariance descriptors such as planarity and omnivariance are derived

  - Normal vector-based features (e.g., verticality)

  - Object-related features (shape descriptors)

  - Attribute features (intensity from LiDAR scanners)

  - Relationship features (connectivity between objects)
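As a concrete example of PCA-based features, the sketch below works on one point's neighborhood. It computes the covariance matrix, its eigenvalues λ1 ≥ λ2 ≥ λ3, and a few descriptors commonly defined from them:
- linearity: (λ1 - λ2)/λ1
- planarity: (λ2 - λ3)/λ1
- sphericity: λ3/λ1
- omnivariance: the cube root of the product of the sum-normalized eigenvalues

The normal is taken as the eigenvector of the smallest eigenvalue, and verticality as 1 - |n_z|. This is one common set of definitions; variants exist in the literature. The closed-form 3×3 eigenvalue solver only keeps the example dependency-free. In practice you would call NumPy's `numpy.linalg.eigh`.

```python
import math


def covariance(points):
    """Population covariance matrix (3x3) of a neighborhood of (x, y, z) points."""
    n = len(points)
    centroid = [sum(p[i] for p in points) / n for i in range(3)]
    cov = [[0.0] * 3 for _ in range(3)]
    for p in points:
        d = [p[i] - centroid[i] for i in range(3)]
        for i in range(3):
            for j in range(3):
                cov[i][j] += d[i] * d[j] / n
    return cov


def det3(m):
    """Determinant of a 3x3 matrix (cofactor expansion along the first row)."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def cross(u, v):
    return (u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2], u[0] * v[1] - u[1] * v[0])


def symmetric_eigenvalues(a):
    """Eigenvalues l1 >= l2 >= l3 of a symmetric 3x3 matrix (closed-form trigonometric method)."""
    off = a[0][1] ** 2 + a[0][2] ** 2 + a[1][2] ** 2
    if off == 0.0:  # already diagonal
        return tuple(sorted((a[0][0], a[1][1], a[2][2]), reverse=True))
    q = (a[0][0] + a[1][1] + a[2][2]) / 3
    p = math.sqrt(((a[0][0] - q) ** 2 + (a[1][1] - q) ** 2 + (a[2][2] - q) ** 2 + 2 * off) / 6)
    b = [[(a[i][j] - (q if i == j else 0.0)) / p for j in range(3)] for i in range(3)]
    phi = math.acos(max(-1.0, min(1.0, det3(b) / 2))) / 3  # clamp guards against rounding
    l1 = q + 2 * p * math.cos(phi)
    l3 = q + 2 * p * math.cos(phi + 2 * math.pi / 3)
    return l1, 3 * q - l1 - l3, l3  # uses trace = l1 + l2 + l3


def eigenvector(a, lam):
    """Unit eigenvector of a for eigenvalue lam, built from the rows of (A - lam * I)."""
    m = [[a[i][j] - (lam if i == j else 0.0) for j in range(3)] for i in range(3)]
    # The eigenvector is orthogonal to every row, so the largest row cross product spans it
    v = max((cross(m[0], m[1]), cross(m[0], m[2]), cross(m[1], m[2])),
            key=lambda c: c[0] ** 2 + c[1] ** 2 + c[2] ** 2)
    norm = math.sqrt(sum(c * c for c in v))
    if norm == 0.0:
        raise ValueError("repeated eigenvalue: eigenvector direction is not unique")
    return tuple(c / norm for c in v)


def eigen_features(neighborhood):
    """PCA-based descriptors for one point neighborhood (one common set of definitions)."""
    cov = covariance(neighborhood)
    l1, l2, l3 = symmetric_eigenvalues(cov)
    total = l1 + l2 + l3
    normal = eigenvector(cov, l3)  # direction of least variance
    return {
        "linearity": (l1 - l2) / l1,
        "planarity": (l2 - l3) / l1,
        "sphericity": l3 / l1,
        # cube root of the product of sum-normalized eigenvalues; clamp tiny negatives
        "omnivariance": max((l1 / total) * (l2 / total) * (l3 / total), 0.0) ** (1 / 3),
        "verticality": 1 - abs(normal[2]),
    }


ground = [(float(x), float(y), 0.0) for x in range(5) for y in range(5)]
wall = [(0.0, float(y), float(z)) for y in range(5) for z in range(5)]
for name, patch in (("ground", ground), ("wall", wall)):
    print(name, {k: round(v, 3) for k, v in eigen_features(patch).items()})
```

Both toy patches are perfectly planar, so planarity comes out close to 1 for each. Verticality is what separates them: close to 0 for the ground and close to 1 for the wall. A purely linear neighborhood has a repeated smallest eigenvalue, and the sketch raises an error there instead of returning an arbitrary normal.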

4. Model Definition and Training

- Model Selection: Adopt a “lean” approach, starting with simpler, less computationally intensive models before considering complex AI models.

  - Suggested models include Support Vector Machines (SVM), tree-based ensembles (random forests, gradient boosting), and k-nearest neighbors.

  - Deep learning models are suitable but require careful consideration of data modality and computational resources.

- Data Preparation: This is a crucial step in formatting the data for the chosen model, considering factors like point cloud downsampling, tiling, and voxel size.

- Training: Begin with random weight initialization (for deep learning) or default hyperparameters (for classical models).

  - Implement AutoML practices to adjust hyperparameters automatically within the training loop.

- Data Splitting: Divide the dataset into training, testing, and validation sets.

  - Testing assesses model performance during development, while validation, on data kept untouched until the end, represents real-world scenarios.
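In the lean spirit of the model-selection advice, a k-nearest-neighbors baseline on per-point feature vectors (for instance planarity, linearity, and verticality) is a cheap first model. The sketch below does three things:
1. It shuffles labeled samples into three splits.
2. It picks `k` on a tuning split with a small grid search. This is a deliberately simple stand-in for the AutoML practices mentioned above, not an AutoML library.
3. It reports accuracy once on a held-out split that is never used for choices and serves as the real-world proxy.

The synthetic data, class names, split ratios, and candidate `k` values are all illustrative assumptions.

```python
import math
import random
from collections import Counter


def three_way_split(samples, train_frac=0.7, tune_frac=0.15, seed=0):
    """Shuffle once, then cut into train / tuning / held-out splits."""
    shuffled = samples[:]
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_frac)
    n_tune = round(len(shuffled) * tune_frac)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_tune],
            shuffled[n_train + n_tune:])


def knn_predict(train, features, k):
    """Majority label among the k training samples closest in feature space."""
    nearest = sorted(train, key=lambda sample: math.dist(sample[0], features))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def accuracy(train, split, k):
    """Fraction of samples in `split` whose k-NN prediction matches the label."""
    hits = sum(knn_predict(train, x, k) == y for x, y in split)
    return hits / len(split)


def tune_k(train, tune, candidates=(1, 3, 5, 7)):
    """Tiny grid search: keep the k with the best accuracy on the tuning split."""
    return max(candidates, key=lambda k: accuracy(train, tune, k))


def jitter(center, rng, spread=0.05):
    """Synthetic feature vector: a class center plus uniform noise."""
    return tuple(c + rng.uniform(-spread, spread) for c in center)


# Hypothetical (planarity, linearity, verticality) features for two classes
rng = random.Random(42)
samples = ([(jitter((0.9, 0.05, 0.0), rng), "ground") for _ in range(50)]
           + [(jitter((0.9, 0.05, 1.0), rng), "facade") for _ in range(50)])

train, tune, holdout = three_way_split(samples)
best_k = tune_k(train, tune)  # chosen on the tuning split only
print("k =", best_k, "held-out accuracy =", accuracy(train, holdout, best_k))
```

Both toy classes are planar and differ only in verticality. This illustrates why the feature-engineering stage matters as much as the model choice: a weak model on good features is already a solid baseline.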

5. Evaluation

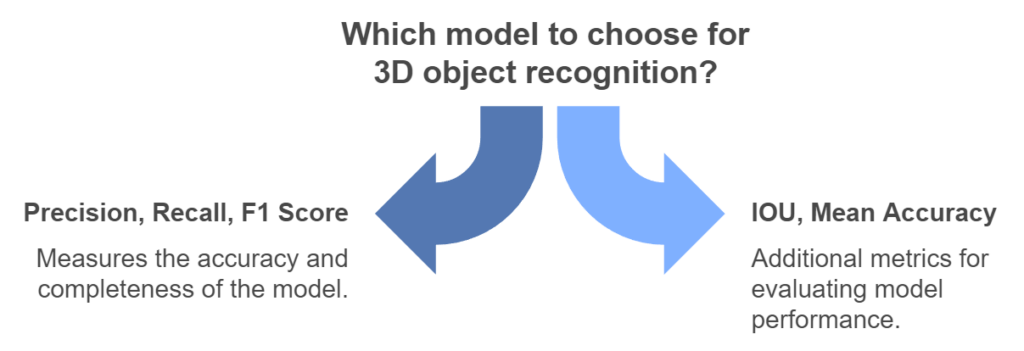

- Define relevant metrics for evaluating model performance, focusing on precision, recall, and F1 score.

- Consider intersection over union (IoU) and mean accuracy as additional metrics.

- Benchmark different models to compare their performance and choose the best option.

- “Whenever we’re in the 3D space, especially for a 3D object recognition, you really need to know how precise your model is and how exhaustive it is. So there is this measure of precision and recall that are super important and F1 score that combines both of them.”
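The metrics named above follow directly from per-class counts of true positives (TP), false positives (FP), and false negatives (FN):
- precision = TP/(TP+FP)
- recall = TP/(TP+FN)
- F1 = 2·precision·recall/(precision+recall)
- IoU = TP/(TP+FP+FN)

Mean accuracy is taken here as the average per-class recall, which is how it is commonly reported for point cloud segmentation. Below is a minimal, dependency-free sketch on per-point labels; the labels are made up for illustration.

```python
def per_class_metrics(y_true, y_pred):
    """Precision, recall, F1 and IoU for every class seen in the labels."""
    report = {}
    for c in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
        report[c] = {"precision": precision, "recall": recall, "f1": f1, "iou": iou}
    return report


def summary(y_true, y_pred):
    """Overall accuracy, mean (per-class) accuracy, and mean IoU."""
    report = per_class_metrics(y_true, y_pred)
    n_classes = len(report)
    return {
        "overall_accuracy": sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true),
        "mean_accuracy": sum(m["recall"] for m in report.values()) / n_classes,
        "mean_iou": sum(m["iou"] for m in report.values()) / n_classes,
    }


y_true = ["ground", "ground", "ground", "pole", "pole", "car"]
y_pred = ["ground", "ground", "pole", "pole", "car", "car"]
for name, m in per_class_metrics(y_true, y_pred).items():
    print(name, {k: round(v, 3) for k, v in m.items()})
print({k: round(v, 3) for k, v in summary(y_true, y_pred).items()})
```

The "ground" class shows why both numbers matter. Its precision is perfect (every predicted ground point is correct), but its recall is only 2/3 because one ground point was missed.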

6. Deployment

- Focus on optimization, automation, and shipping the application.

- Emphasize a manual approach before automating processes to avoid potential issues.

“If you automate too early on, that’s the recipe for disaster. So automate when everything is working at a manual aspect first, but yeah, everything should be working before you automate.”
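One way to follow both the modular build and the “manual first, automate later” advice is to write each stage as a plain function. You can run and inspect each one by hand, then chain the same functions once they behave.

The sketch below is a hypothetical harness, not a specific framework. It runs named stages in order and times each one, so you know where optimization effort pays off. It also calls an optional check after every stage, so automated runs fail loudly instead of silently. The stage names and the naive height threshold are illustrative only.

```python
import time
from typing import Any, Callable, Optional

Stage = tuple[str, Callable[[Any], Any]]


def run_pipeline(data: Any, stages: list[Stage],
                 check: Optional[Callable[[str, Any], None]] = None):
    """Run stages in order; return the final output and per-stage wall-clock timings."""
    timings = {}
    for name, stage in stages:
        start = time.perf_counter()
        data = stage(data)
        timings[name] = time.perf_counter() - start
        if check is not None:
            check(name, data)  # e.g. verify the intermediate output still looks sane
    return data, timings


def non_empty(name, output):
    """Example check: stop the run if a stage returned nothing."""
    if not output:
        raise RuntimeError(f"stage '{name}' produced no data")


# Hypothetical stages on a toy point list; swap in real ones as they mature
stages = [
    ("crop", lambda pts: [p for p in pts if abs(p[0]) < 20 and abs(p[1]) < 20]),
    ("drop_ground", lambda pts: [p for p in pts if p[2] > 0.2]),  # naive height cut
]
points = [(1.0, 2.0, 0.0), (3.0, 1.0, 1.5), (50.0, 0.0, 2.0)]
result, timings = run_pipeline(points, stages, check=non_empty)
print(result)         # [(3.0, 1.0, 1.5)]
print(list(timings))  # ['crop', 'drop_ground']
```

During the manual phase, you would call `stages[0][1](points)` and inspect the output yourself. `run_pipeline` only takes over once each stage is trusted.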

This pipeline offers a structured approach to developing a 3D object recognition app. By carefully considering each stage and following the recommended best practices, developers can create robust and effective applications.

Conclusion

The process begins with data collection, using light detection and ranging (LiDAR), image-based approaches, or a hybrid combination. The second stage, data pre-processing, cleans the data, removes noise, and applies sampling strategies. Next, feature engineering extracts key features for object detection, such as principal component analysis, normal vectors, and object-related attributes. The fourth stage, model definition and training, involves selecting a suitable model, such as support vector machines (SVMs) or deep learning models, preparing the data for training, and tuning hyperparameters. The fifth stage, evaluation, measures the model’s precision (how accurate its detections are) and recall (how exhaustive they are), often benchmarking several models to compare performance. Finally, deployment involves optimizing the system and automating the process for real-world applications.

My 3D Recommendation 🍉


Other 3D Tutorials

- 3D Pipeline Architecture: A Founder’s Blueprint

- Multi-View Engine for 3D Generative AI Models (Python Tutorial)

- 3D Scene Graphs for Spatial AI with NetworkX and OpenUSD

- 3D Reconstruction Pipeline: Photo to Model Workflow Guide

- Synthetic Point Cloud Generation of Rooms: Complete 3D Python Tutorial

- 3D Generative AI: 11 Tools (Cloud) for 3D Model Generation

- 3D Gaussian Splatting: Hands-on Course for Beginners

- Building a 3D Object Recognition Algorithm: A Step-by-Step Guide

- 3D Sensors Guide: Active vs. Passive Capture

- 3D Mesh from Point Cloud: Python with Marching Cubes Tutorial

- How To Generate GIFs from 3D Data with Python

- 3D Reconstruction from a Single Image: Tutorial

- 3D Reconstruction Methods, Hardware and Tools for 3D Data Processing

- 3D Deep Learning Essentials: Resources, Roadmaps and Systems

- Tutorial for 3D Semantic Segmentation with Superpoint Transformer

If you want to get a tad more toward application-first or depth-first approaches, I curated several learning tracks and courses on this website to help you along your journey. Feel free to follow the ones that best suit your needs, and do not hesitate to reach out directly to me if you have any questions or if I can advise you on your current challenges!

Open-Access Knowledge

- Medium Tutorials and Articles: Standalone Guides and Tutorials on 3D Data Processing (5′ to 45′)

- Research Papers and Articles: Research Papers published as part of my public R&D work.

- Email Course: Access a 7-day E-Mail Course to Start your 3D Journey

- YouTube Education: Not articles, not research papers, open videos to learn everything around 3D.

3D Online Courses

- 3D Python Crash Course (Complete Standalone): 3D Coding with Python.

- 3D Reconstruction Course (Complete Standalone): Open-Source 3D Reconstruction (incl. NeRF and Gaussian Splatting).

- 3D Point Cloud Course (Complete Standalone): Pragmatic, Complete, and Commercial Point Cloud Workflows.

- 3D Object Detection Course (3D Python Recommended): Practical 3D Object Detection with 3D Python.

- 3D Deep Learning Course (3D Python Recommended): Most-Advanced Course for Top AI Engineers and Researchers

3D Learning Tracks

- 3D Segmentator OS: 3D Python Operating System to Create Low-Dependency 3D Segmentation Apps

- 3D Collector’s Pack: Complete Course Track from 3D Data Acquisition to Automated System Creation