Dive into the fascinating world of 3D deep learning, where objects come alive and possibilities extend beyond 2D pixels.

Few technologies change the landscape of Computer Science with the pace and impact of Artificial Intelligence. But one specific aspect is even more profound: 3D Deep Learning.

Why Learn 3D Deep Learning?

The impact of 3D Deep Learning is massive. From extracting information from MRI brain scans to automating 3D asset generation for games (including massive real-world datasets from 3D mapping and 3D reconstruction techniques), its reach and potential are unrivaled, especially when it comes to making self-driving cars a reality.

But of course, I want to give you a clear roadmap that fits your goals for developing 3D Deep Learning systems, along three tracks: the Hobbyist, the Engineer, and the Researcher / Innovator.

Let me demystify the field and provide something as close to a recipe as possible to unlock hands-on 3D Deep Learning skills and become an “elite” 3D Innovator.

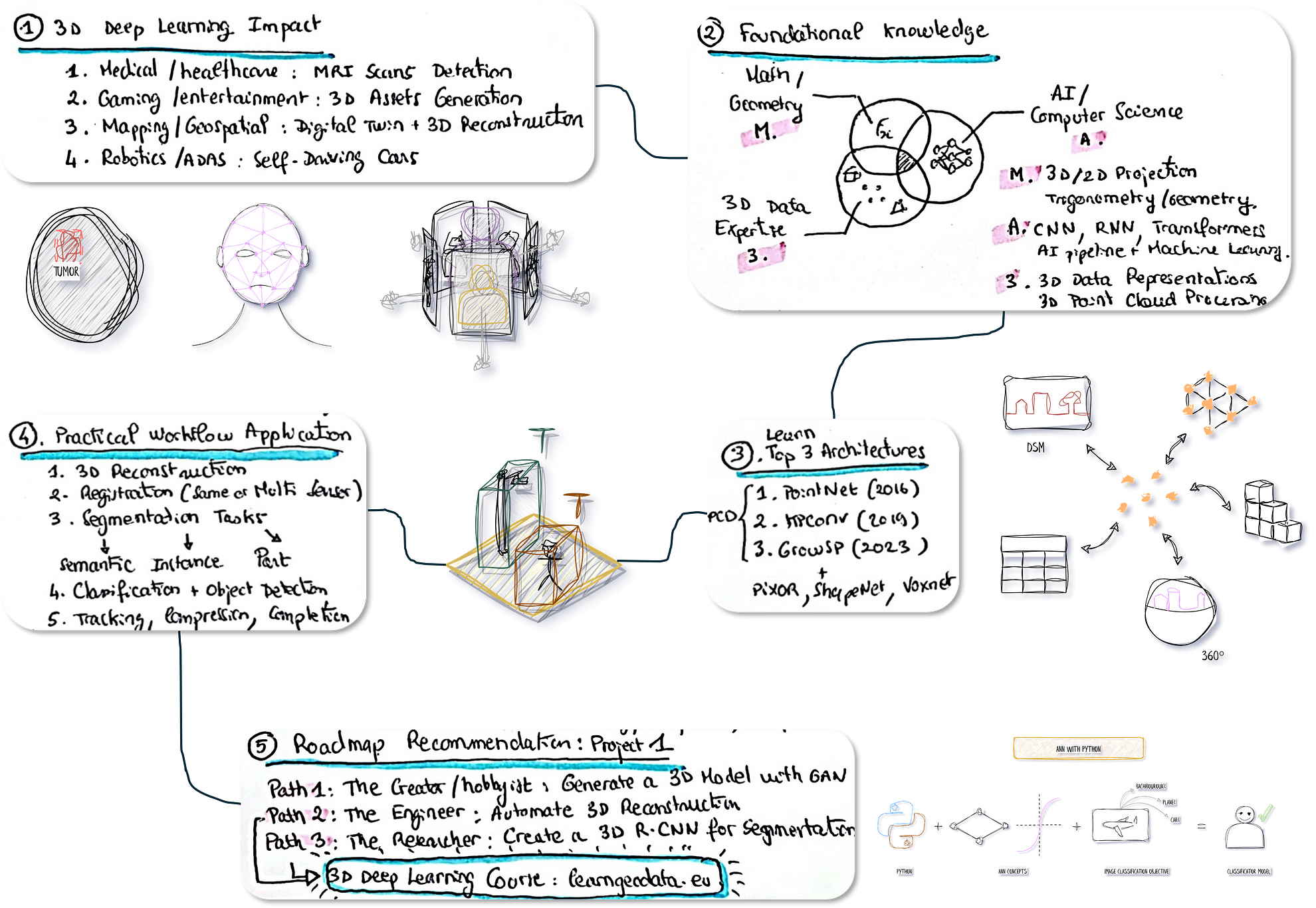

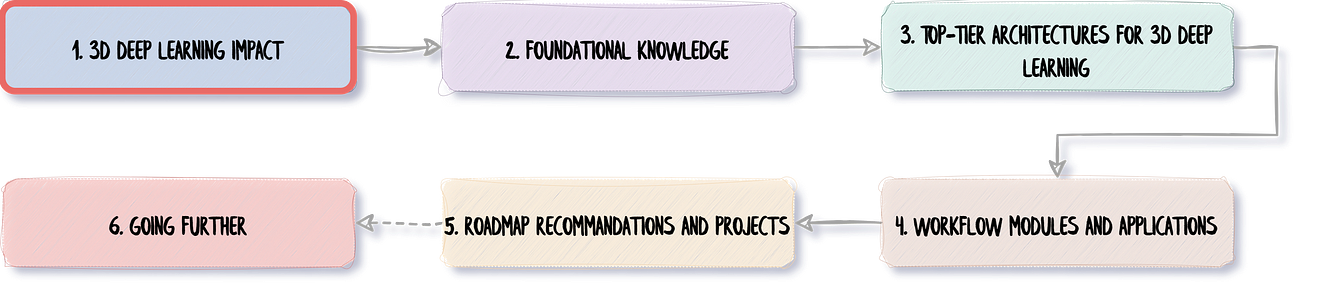

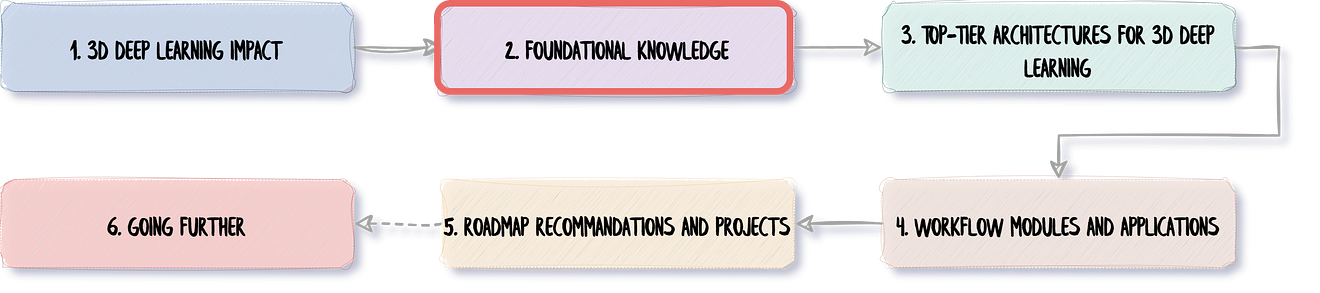

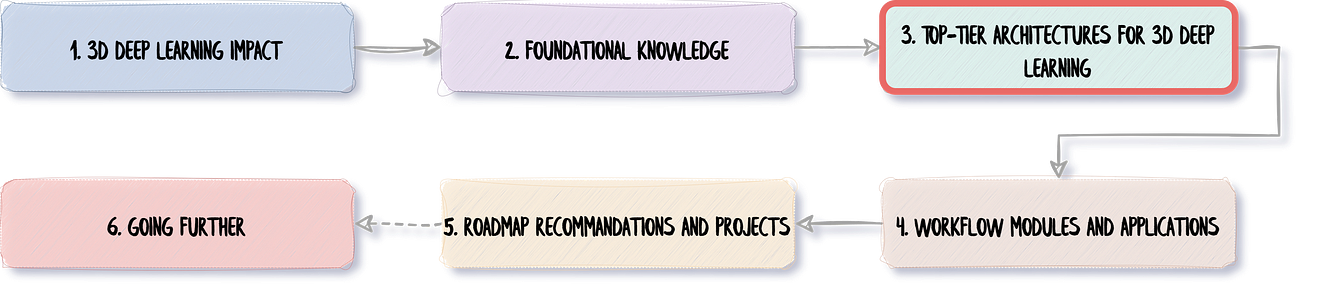

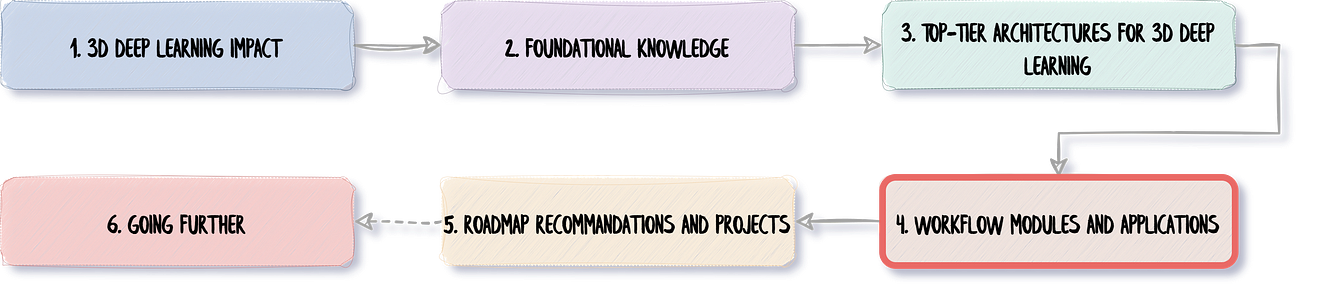

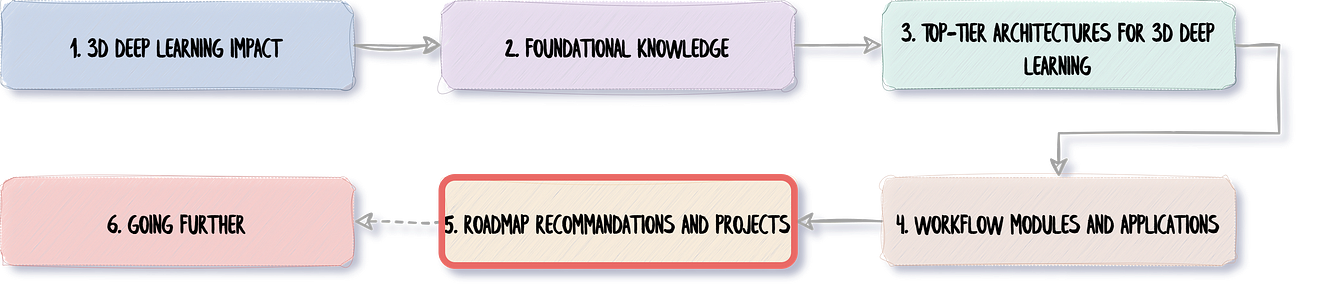

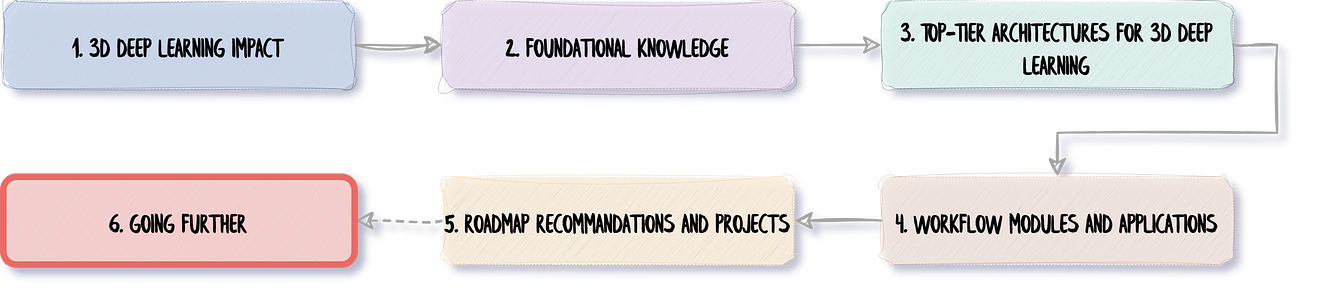

I approach the topic with five main modules, as illustrated below:

1. 3D Deep Learning Impact
2. Foundational Knowledge and Resources
3. Top-Tier Architectures to Master
4. Practical Workflow Applications
5. Roadmap Recommendations and Projects

[BONUS] Going Beyond

Whenever you are ready, let us devour this 3D Deep Learning enchilada 🌶️

3D Deep Learning Impact

In 2024, we have an opportunity to create deep tech projects with 3D deep learning. But it is easier if we break down the learning process: how to get started and how to find the track that best fits your world. The first stage is identifying why we should learn 3D deep learning in the first place. What is its impact? Here, I selected four main elements.

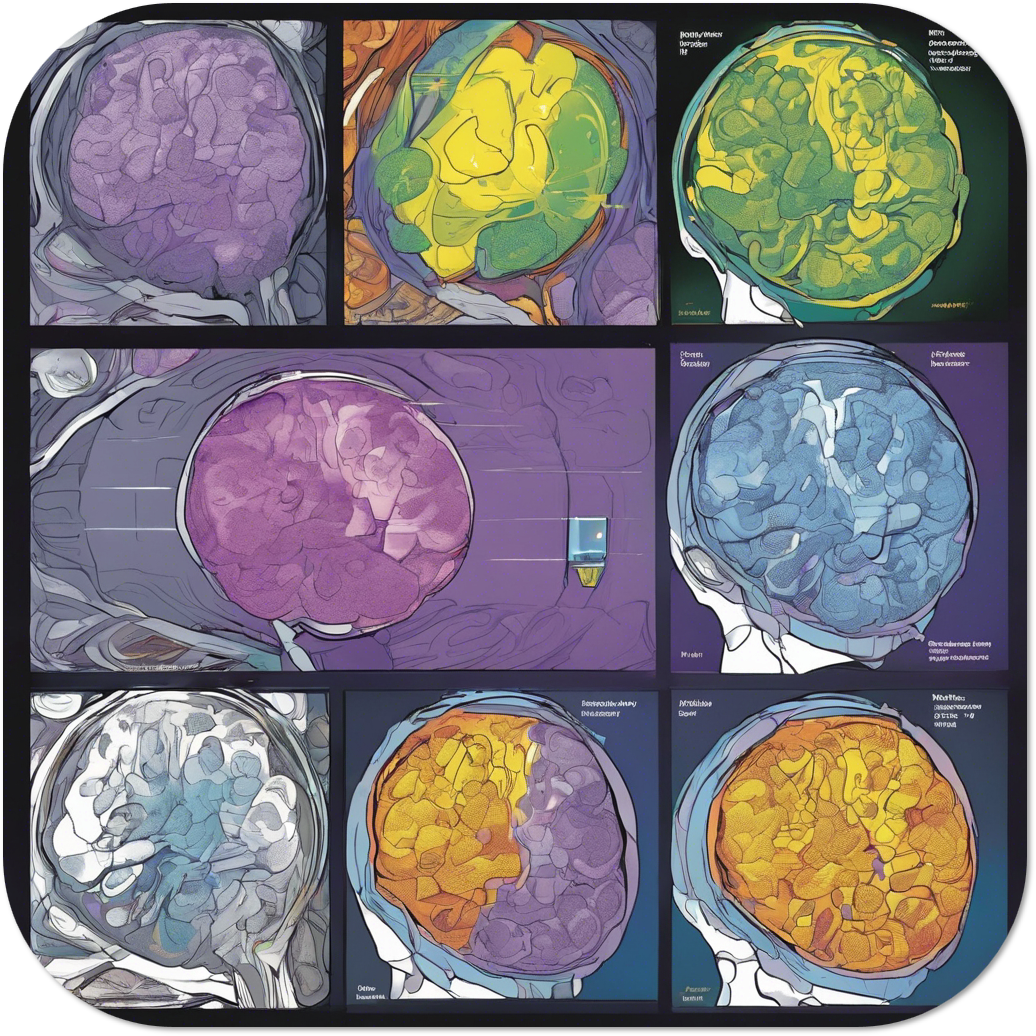

Medical and Healthcare Applications

The first one is medical and healthcare. When you go to the hospital and need a scan, one specific type is MRI (Magnetic Resonance Imaging), which acquires slices of your brain or body that are then processed with 3D workflows.

Injecting 3D deep learning there means you may be able to develop more accurate detection algorithms that spot, say, cancer early on, or that help the doctor make a better prediction about a patient’s health status or diagnosis, which is very significant.

Gaming and Entertainment Applications

The second element is gaming and entertainment. If you want to speed up production in this industry, you can generate 3D assets that can be used in these environments very efficiently.

You can streamline that into a complete end-to-end pipeline that takes, for example, a text prompt as input and outputs a 3D asset that you can inject directly into your environment. If you work in the film industry, the video game industry, or any industry related to entertainment, you have a lot of applications. For example, if you want to develop a new VR game, you can generate synthetic 3D data or use procedural modeling. Most of these 3D applications will be linked to 3D reconstruction. But of course, you can also involve AI agents, and then you will need some 3D analysis.

Mapping and Geospatial Applications

Another very cool area is mapping and geospatial work. With 3D deep learning, you can speed up various steps that lead to digital twin creation, for example, or to 3D reconstruction at large.

There are also a lot of opportunities in this area. For example, you can identify whether the urban region around a specific area has too many buildings, how high these buildings are, or how many pools there are per square meter. You can handle some of these tasks with 2D images and 2D modalities only, but the third dimension gives you much more robustness and extends the range of applications.

Robotics / Self-Driving Cars

Finally, if you are passionate about robotics or autonomous driving systems, I also recommend checking this area out. Making these systems a reality will require learning 3D deep learning at some point, because specific elements of their architectures leverage 3D deep learning.

For example, think about a robot that needs to understand its environment and surroundings, detect specific objects, and interact with them. You could develop a robot that automatically detects a coffee machine, detects when the coffee is ready, grabs the cup by its little handle, and brings it to your desk so that you can stay seated without doing any exercise. How awesome would that be?

Jokes aside, that’s just one typical example. Robot vacuum cleaners open up many applications too, and some robots can fly a sensor that generates a 3D point cloud map. So, there are a lot of applications in robotics. Here, I include everything linked to perception, i.e., perceiving things with a physical robot.

That covers the first stage: why it’s essential to learn 3D Deep Learning.

Now that you understand where you want to go or what you want to address first, it’s time to understand what foundational knowledge is needed.

Foundational Knowledge of 3D Deep Learning

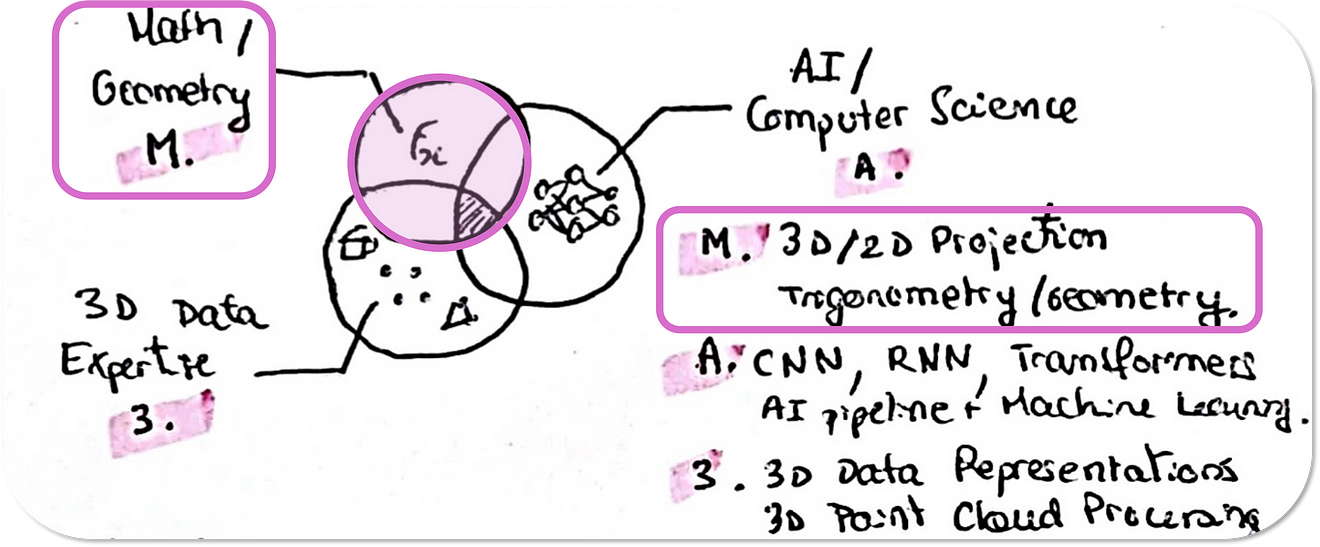

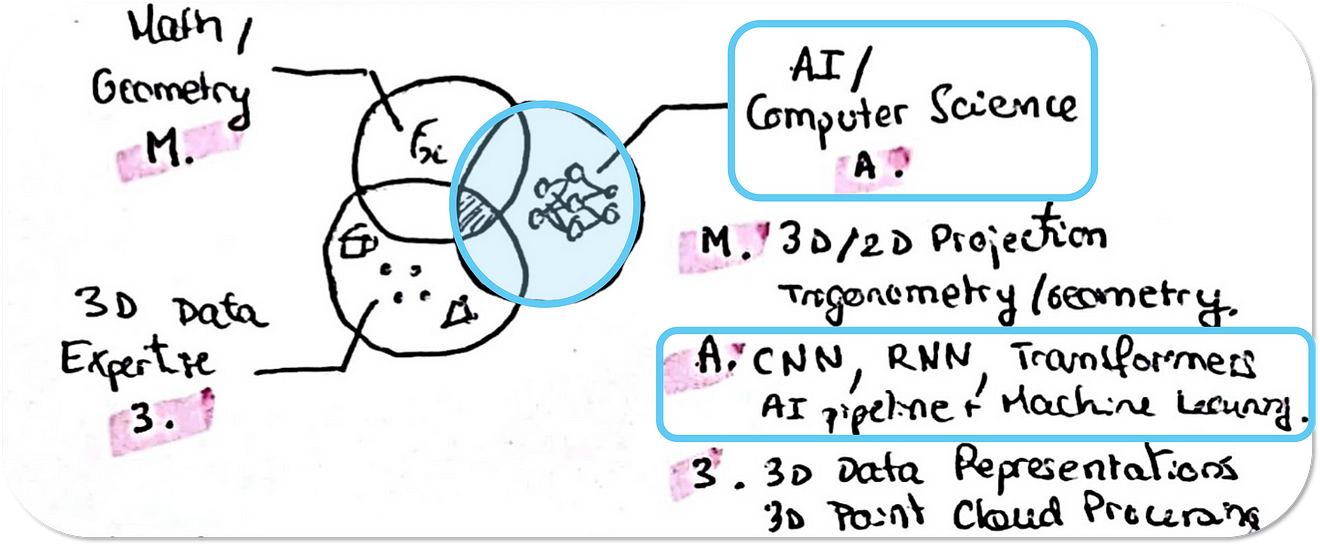

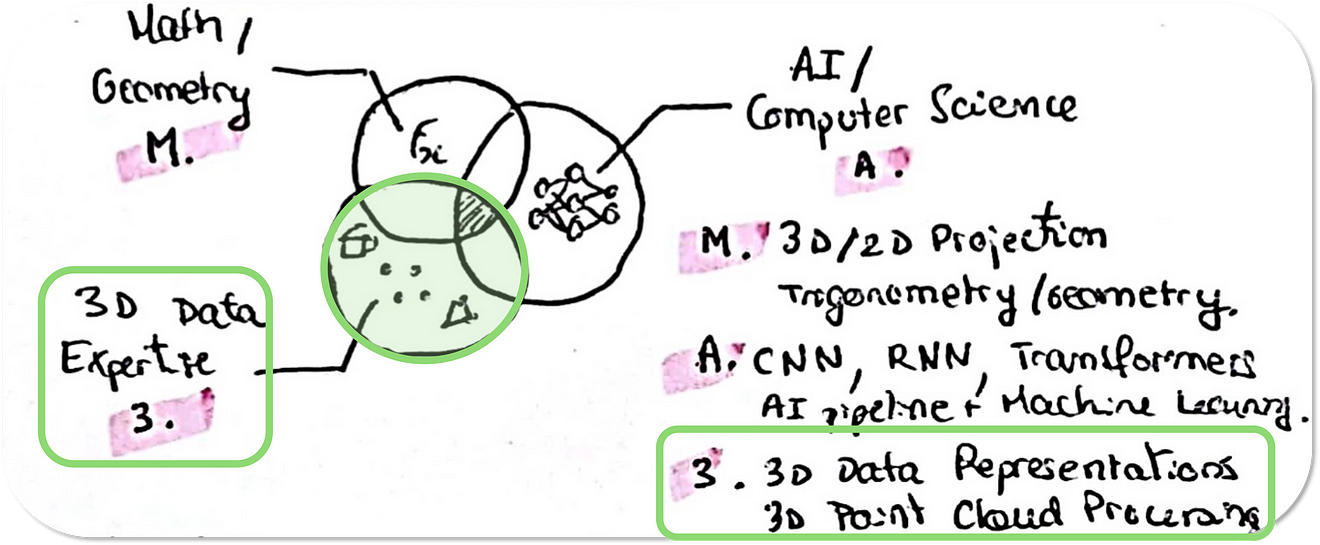

The good news is that you do not need to spend 10,000 hours. You can cut the time down to the essentials so you can try out your ideas early, and the essentials rest on three main pillars: mathematics, artificial intelligence, and 3D data.

Mathematics Knowledge

You want to make sure you grasp geometry, 3D projection and reprojection, and trigonometry; that is enough at this stage.
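To make the link between trigonometry and projection concrete, here is a minimal pinhole-camera sketch in Python with NumPy. It assumes an ideal camera with no lens distortion, square pixels, and a principal point at the image center. The 640×480 image, the 60° horizontal field of view, and the helper names (`focal_from_fov`, `intrinsics`, `project`, `reproject`) are all hypothetical.

```python
import numpy as np

def focal_from_fov(width_px, fov_deg):
    """Focal length in pixels from the horizontal field of view (trigonometry)."""
    return (width_px / 2.0) / np.tan(np.radians(fov_deg) / 2.0)

def intrinsics(width_px, height_px, fov_deg):
    """Pinhole intrinsic matrix K, assuming square pixels and a centered principal point."""
    f = focal_from_fov(width_px, fov_deg)
    return np.array([[f, 0.0, width_px / 2.0],
                     [0.0, f, height_px / 2.0],
                     [0.0, 0.0, 1.0]])

def project(points_cam, K):
    """Project Nx3 camera-frame points (Z forward, Z > 0) to Nx2 pixel coordinates."""
    uvw = points_cam @ K.T            # homogeneous pixel coordinates
    return uvw[:, :2] / uvw[:, 2:3]   # perspective division by depth Z

def reproject(pixels, depths, K):
    """Lift Nx2 pixels with known depths back to Nx3 camera-frame points."""
    ones = np.ones((pixels.shape[0], 1))
    rays = np.hstack([pixels, ones]) @ np.linalg.inv(K).T  # viewing rays with Z = 1
    return rays * depths[:, None]

K = intrinsics(640, 480, 60.0)
pts = np.array([[0.0, 0.0, 2.0], [0.5, -0.25, 4.0]])
uv = project(pts, K)
back = reproject(uv, pts[:, 2], K)
print(uv)
print(np.allclose(back, pts))
```

Projection discards depth through the division by Z. That is why reprojection needs the depth of each pixel as an extra input.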

Here are my selected top 3 resources on 3D Geometry and Projections:

- 🧑🏫 Open Online Course: Introduction to 3D Geometry.

This course, offered by edX, delves into the fundamentals of 3D geometry, covering topics like lines, planes, surfaces, and volumes. You’ll gain a foundational understanding of 3D space through real-world applications and problem-solving exercises. 👀 View Course

- 📜 Online Article: 3D Geometry for Beginners.

This comprehensive article, published by Math is Fun, provides a clear and concise introduction to 3D geometry concepts. It covers points, lines, planes, and shapes in 3D space, along with helpful illustrations and visualizations. 👁️ Read Article

- 📖 Book: Calculus: Early Transcendentals, by James Stewart.

While not solely focused on 3D geometry, this calculus textbook by James Stewart provides a solid foundation in the mathematical concepts underlying 3D space, including vectors, functions, and analytic geometry. This knowledge is essential for understanding and manipulating 3D objects and their projections. 🏦 Access Book

Then, we’ll move on to Artificial Intelligence. Woooou.

Artificial Intelligence / Computer Science Knowledge

At this stage, it is enough to know what a neural network is, what a convolutional neural network is, and how an architecture is designed.

Having an idea about transformers and the concepts around them will help, but it is not necessary from the get-go. Nevertheless, here is my selection of the top 3 resources to get you started on your journey into the exciting world of Artificial Intelligence (AI) and Data Science:

3D Deep Learning and AI Resources

- 🧑🏫 Open Online Course: Crash Course in Artificial Intelligence.

This free course offered by Google AI on Coursera provides a broad overview of AI fundamentals, covering topics like machine learning, deep learning, natural language processing, and computer vision. The course is designed for beginners without prior knowledge of AI and uses engaging visuals and interactive quizzes to make learning fun and effective. 👀 View Course

- 📜 Online Article: A Gentle Introduction to Machine Learning.

This article, published by Towards Data Science, offers a clear and concise explanation of the core concepts of machine learning, including algorithms, models, training data, and evaluation metrics. It’s an excellent resource for beginners who want to grasp how machines learn from data. 👁️ Read Article

- 📖 Book: Hands-On Machine Learning with Scikit-Learn, Keras & TensorFlow (2nd Edition) by Aurélien Géron

This is one of my all-time favorite tech books. Aurélien Géron dives deeper into machine learning, teaching you how to implement various Machine Learning algorithms using popular Python libraries like Scikit-learn, Keras, and TensorFlow. While it requires some basic programming knowledge (Python), the book offers a hands-on approach to building machine-learning skills. 🏦 Access Book

🦚 Note: Remember, these are just a starting point, and many other excellent resources are available online and in libraries. As you delve deeper into AI and Data Science, you’ll discover more specific resources that cater to your interests and learning goals.

Towards a 3D Data Expertise

And then, finally, when we talk about 3D data expertise, two things make a perfect foundational layer: 3D data representation (how to represent 3D data at large) and point cloud processing. Because point clouds are a canonical form of 3D data, you can derive other representations, such as voxels or meshes, directly from them, which is phenomenal.
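To illustrate how one representation can be derived from another, here is a minimal NumPy sketch that turns a point cloud into a voxel occupancy grid. The `voxelize` helper, the 0.25 voxel size, and the synthetic unit-cube cloud are assumptions for illustration. A real pipeline would more likely rely on a dedicated library such as Open3D.

```python
import numpy as np

def voxelize(points, voxel_size):
    """Convert an Nx3 point cloud into a boolean occupancy grid.

    Returns the grid, its origin (the min corner of the cloud's bounding box),
    and the integer voxel index of every point.
    """
    origin = points.min(axis=0)
    # Integer voxel coordinates of each point relative to the bounding-box corner
    idx = np.floor((points - origin) / voxel_size).astype(int)
    dims = idx.max(axis=0) + 1
    grid = np.zeros(dims, dtype=bool)
    grid[idx[:, 0], idx[:, 1], idx[:, 2]] = True  # mark every voxel that holds a point
    return grid, origin, idx

rng = np.random.default_rng(0)
cloud = rng.uniform(0.0, 1.0, size=(1000, 3))  # synthetic cloud inside a unit cube
grid, origin, idx = voxelize(cloud, voxel_size=0.25)
print(grid.shape, grid.sum())
```

A regular occupancy grid like this is the kind of input that volumetric 3D CNNs such as VoxNet consume. The point cloud, by contrast, keeps the exact coordinates.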

Here is my pick of the top 3 resources to help you get started:

3D Data Expertise Resources for 3D Deep Learning

- 🧑🏫 Open Online Course: Introduction to 3D Data Processing and Applications.

Offered by yours truly, this 7-day email course introduces you to the fundamentals of 3D data processing, a crucial aspect of 3D AI. You’ll learn about acquiring, storing, analyzing, and visualizing 3D data, equipping you with the skills to handle –wunder wunder– wonderful 3D applications. 👀 View Course

- 📜 Online Article: 3D Data Processing: A Comprehensive Guide.

This article, which I also authored, thoroughly explores the 3D data processing pipeline, encompassing topics like data acquisition, pre-processing, filtering, segmentation, registration, and visualization. It offers valuable insights into the tools and techniques used for handling 3D data effectively. 👁️ Read Article

- 📖 Book: Multiple View Geometry in Computer Vision by Richard Hartley and Andrew Zisserman.

This comprehensive book delves into the theoretical and algorithmic aspects of 3D object reconstruction from multiple views. It covers various techniques like camera calibration, feature matching, structure from motion, and multi-view stereo, providing a base understanding of the underlying concepts behind 3D reconstruction. 🏦 Access Book

🦚Note: Again, while these resources offer a strong foundation, the field of 3D data processing is vast and ever-evolving. But I make it my core mission to help on this track. As you progress, explore specific areas that pique your interest and delve deeper into more specialized resources and research papers.

Once you have that foundational block, you are ready to start on the third stage, which I will call learning top-tier architectures.

Top-tier Architectures for 3D Deep Learning

Implementing a 3D Deep Learning solution is, in my humble opinion, one of the best ways to truly get into the game. But it usually requires willpower, time, and some coding knowledge (e.g., Python).

If you do not want to go down that path right away, understanding top-tier architectures and knowing how to read them is at least a great starting point. Here, I have selected three major ones for you.

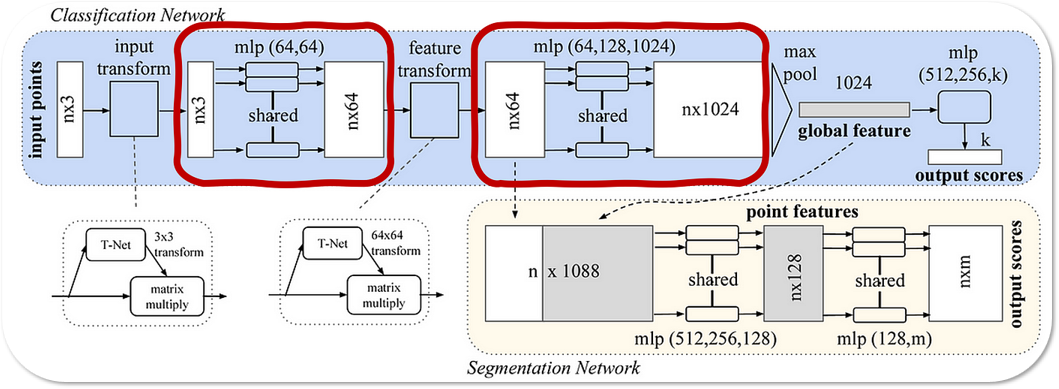

Point-based 3D Deep Learning for unstructured datasets: PointNet

The first one is PointNet, one of the first architectures to directly process point clouds as unstructured data.

PointNet examines 3D data, such as point clouds made of points representing objects or scenes, in a savvy manner.

Unlike images or videos, point clouds lack a predefined grid layout or point order. To solve this problem, PointNet processes each point individually with shared layers.

This extracts nuanced per-point features. A symmetric function (max pooling) then aggregates them to capture the general shape and characteristics of the whole set of points, regardless of their order.

As a result, PointNet can recognize objects in a scene (classification) and label individual parts of the point cloud (segmentation).

📗Article Access: PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation
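The core PointNet idea can be sketched in a few lines of NumPy: a shared per-point network followed by a symmetric max-pooling aggregation. This is a toy forward pass with random, untrained weights and hypothetical layer sizes. It omits the input and feature transform networks (T-Net) and the batch normalization of the original architecture. Its only purpose is to show why the output does not depend on point order.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical layer sizes: 3 -> 64 -> 128 per point, then 128 -> 10 class scores
W1, b1 = rng.normal(size=(3, 64)), np.zeros(64)
W2, b2 = rng.normal(size=(64, 128)), np.zeros(128)
W3, b3 = rng.normal(size=(128, 10)), np.zeros(10)

def relu(x):
    return np.maximum(x, 0.0)

def pointnet_forward(points):
    """Toy PointNet-style classifier forward pass on an Nx3 point cloud."""
    # Shared MLP: the same weights are applied to every point independently
    h = relu(points @ W1 + b1)
    h = relu(h @ W2 + b2)
    # Symmetric aggregation: max over points yields a global shape descriptor
    global_feat = h.max(axis=0)
    return global_feat @ W3 + b3  # unnormalized class scores (logits)

cloud = rng.normal(size=(256, 3))
scores = pointnet_forward(cloud)
shuffled = cloud[rng.permutation(len(cloud))]  # same points, different order
print(np.allclose(scores, pointnet_forward(shuffled)))
```

Replacing `max` with an order-dependent operation, such as concatenating the per-point features, would break this invariance.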

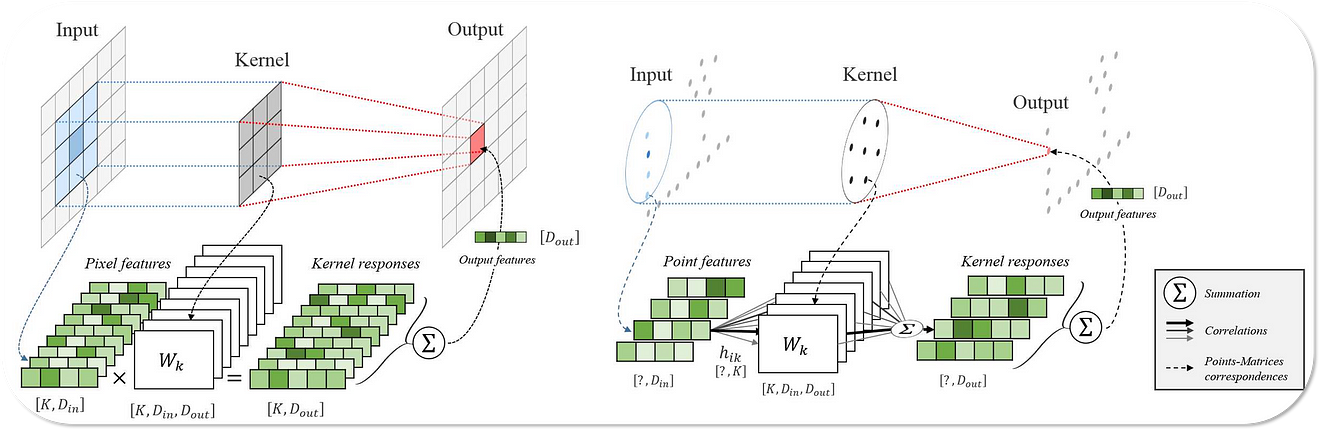

Moving to 3D Convolutions for 3D Deep Learning

The second one is KPConv, which is very interesting for understanding how you can apply convolution kernels to unstructured data, bringing a structured approach to an unstructured dataset.

Envision a typical filter in an image processing application, except that instead of gliding across a grid, it consists of several freely arranged points in 3D space.

These “kernel points” are used to inspect a point cloud: the features of nearby points are weighted according to their distances to each kernel point.

This is the core of the KPConv framework, which enables learning directly from irregular and sparse 3D data, like point clouds, without a rigid grid pattern.

As a result, it is valuable for tasks like classifying and segmenting objects in 3D, for example to help self-driving cars understand their environment.

📘Article Access: KPConv: Flexible and Deformable Convolution for Point Clouds
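The kernel-point idea can be illustrated with a NumPy sketch of a rigid KPConv-style operation at a single query point. It follows the linear correlation h = max(0, 1 − ‖y − x̃_k‖ / σ) between a neighbor offset y and a kernel point x̃_k described in the KPConv paper. Several choices are hypothetical: the `kpconv_at` helper, the seven axis-aligned kernel points, the radius, σ, and the feature sizes. The weights are random and the deformable variant is not covered.

```python
import numpy as np

rng = np.random.default_rng(0)

def kpconv_at(query, points, feats, kernel_pts, weights, radius, sigma):
    """Rigid kernel point convolution evaluated at one query point.

    query: (3,), points: (N, 3), feats: (N, C_in),
    kernel_pts: (K, 3) offsets relative to the query, weights: (K, C_in, C_out).
    """
    # Neighborhood: points within `radius` of the query, expressed relative to it
    rel = points - query
    mask = np.linalg.norm(rel, axis=1) < radius
    rel, f = rel[mask], feats[mask]
    # Linear correlation between each neighbor and each kernel point: (N_nb, K)
    d = np.linalg.norm(rel[:, None, :] - kernel_pts[None, :, :], axis=2)
    h = np.maximum(0.0, 1.0 - d / sigma)
    # Sum over neighbors i, kernel points k, and input channels c of h_ik * f_ic * W_kco
    return np.einsum("ik,ic,kco->o", h, f, weights)

# Hypothetical kernel: one center point plus 6 points on the +/- axes
kernel_pts = 0.5 * np.vstack([np.zeros(3), np.eye(3), -np.eye(3)])
weights = rng.normal(size=(7, 4, 8))            # K=7, C_in=4, C_out=8
points = rng.uniform(-1.0, 1.0, size=(500, 3))
feats = rng.normal(size=(500, 4))
out = kpconv_at(np.zeros(3), points, feats, kernel_pts, weights, radius=1.0, sigma=0.5)
print(out.shape)
```

Unlike an image filter, the neighbors here can be any number of points at arbitrary positions. The distance-based weights h are what let the kernel "see" them without a grid.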

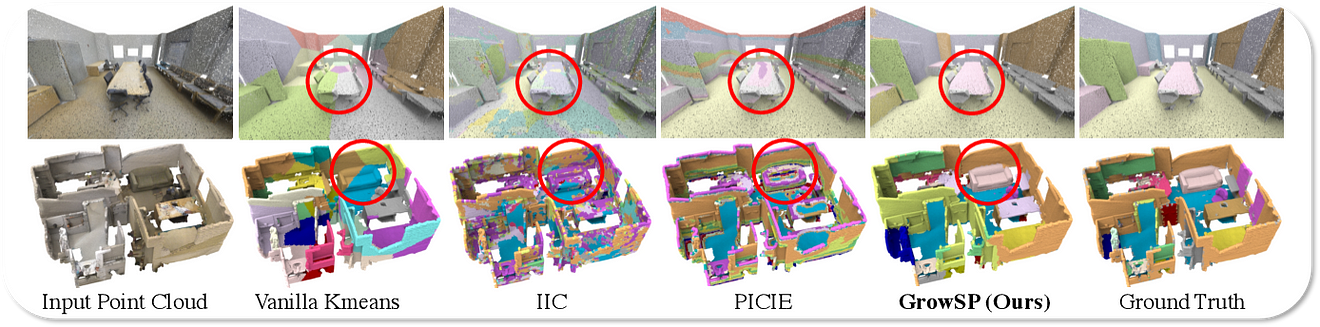

Unsupervised learning for 3D Point Clouds with 3D Deep Learning

The final one for point clouds is GrowSP, an unsupervised method. I like it mainly because it resonates with my thinking around Gestalt theory: it groups a set of elements and reasons from these groups of visual cues instead of going down to the point level.

The GrowSP architectural framework is made up of three key components:

1. Feature harvester: This module picks up signals from individual points in the input point cloud to understand their characteristics.

2. Superpoint buildout algorithm: This component progressively grows groups of points into larger superpoints.

3. Clustered semantic scoring module: This piece groups superpoints into “recognizable” elements (semantic primitives) that are used to generate the final prediction.

In short, the key to the GrowSP framework is a feature harvester that captures the individual characteristics of each point in the point cloud. These features are then used to build superpoints by progressively grouping points based on those characteristics. Finally, the superpoints are used to find the main components of the studied environment.

📙Article Access: GrowSP: Unsupervised Semantic Segmentation of 3D Point Clouds
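The growing idea can be illustrated with a heavily simplified NumPy sketch:

1. Start from small initial superpoints.
2. Average the point features inside each one.
3. Repeatedly merge superpoints by clustering their mean features into fewer groups.
4. Finally, cluster them into a handful of semantic primitives.

This is not the GrowSP implementation. The features are given rather than produced by a trained feature harvester, and the initial superpoints are hypothetical. The plain k-means with farthest-point initialization, the merge schedule, and the number of primitives are also assumptions chosen for illustration.

```python
import numpy as np

def kmeans(x, k, iters=20):
    """Plain k-means with farthest-point initialization; returns one label per row of x."""
    k = min(k, len(x))
    chosen = [0]
    for _ in range(1, k):
        # Next initial center: the row farthest from all centers picked so far
        d = ((x[:, None, :] - x[chosen][None, :, :]) ** 2).sum(axis=-1).min(axis=1)
        chosen.append(int(np.argmax(d)))
    centers = x[chosen].astype(float)
    for _ in range(iters):
        # Assign each row to its nearest center (squared Euclidean distance)
        labels = np.argmin(((x[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1), axis=1)
        for j in range(k):
            if np.any(labels == j):  # keep the previous center if a cluster empties
                centers[j] = x[labels == j].mean(axis=0)
    return labels

def compact(labels):
    """Relabel to consecutive integers 0..m-1."""
    return np.unique(labels, return_inverse=True)[1]

def mean_features(feats, sp):
    """Average point features inside each superpoint (sp must be compact)."""
    return np.stack([feats[sp == s].mean(axis=0) for s in range(sp.max() + 1)])

def grow_superpoints(feats, init_sp, schedule, n_primitives):
    """Progressively merge superpoints, then group them into semantic primitives."""
    sp = compact(init_sp)
    for n_target in schedule:  # decreasing number of superpoints per round
        merge = kmeans(mean_features(feats, sp), n_target)
        sp = compact(merge[sp])  # every point inherits its superpoint's new label
    primitives = kmeans(mean_features(feats, sp), n_primitives)
    return sp, primitives[sp]

rng = np.random.default_rng(1)
# Two synthetic "materials" with distinct 8-D features, 300 points each
feats = np.vstack([rng.normal(0.0, 0.1, (300, 8)), rng.normal(3.0, 0.1, (300, 8))])
init_sp = np.arange(600) // 10  # hypothetical initial superpoints of 10 points each
sp, semantic = grow_superpoints(feats, init_sp, schedule=[30, 10], n_primitives=2)
print(sp.max() + 1, np.unique(semantic[:300]), np.unique(semantic[300:]))
```

Working on superpoint means instead of individual points is what makes the grouping robust. Each merge round reasons about larger and larger groups of points.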

Other Architectures worth mentioning

Then, you can extend that to VoxNet, ShapeNet, or PIXOR, for example: two other architectures and one large 3D model repository that show how to handle other modalities and data:

- 🧊 VoxNet: A 3D Convolutional Neural Network for Real-Time Object Recognition. VoxNet Access

- 🐒 ShapeNet: An Information-Rich 3D Model Repository. ShapeNet Access

- 🖼️ PIXOR: Real-time 3D Object Detection from Point Clouds. PIXOR Access

🦚 Note: I intentionally selected a tiny subset of 3D Deep Learning architectures so as not to overflow your brain. Indeed, it is easy to feel swamped by the new architectures that appear daily. Therefore, it is best to stick to these few architectures, which provide a strong base for developing more robust approaches.

That will be my recommendation as far as architecture understanding goes. Then, we can move on to the fourth pillar: practical workflow modules.

Workflow Applications

It’s essential to understand the wide range of applications and, within them, the specific points in the workflow where deep learning, or 3D deep learning, can help.

The first one is 3D reconstruction.

3D Reconstruction

3D deep learning has a card to play in the 3D reconstruction workflow. The most basic task is going from a 2D image to a point cloud, voxels, or a 3D model.

You will see some applications that, for example, only extract depth information from a single point of view using deep learning. Below is one very nice article with code, Depth Anything, on monocular depth estimation.

💻 Depth Anything: Unleashing the Power of Large-Scale Unlabeled Data
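Once a monocular network has produced a depth map, turning it into a point cloud is a pinhole back-projection. The sketch below assumes the depth map is already metric and that the intrinsics are known; `fx`, `fy`, `cx`, `cy` and the tiny test depth map are hypothetical values. Many monocular models output relative depth, which would first need a conversion to metric depth that this sketch does not perform.

```python
import numpy as np

def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project an HxW metric depth map to an Nx3 point cloud in the camera frame."""
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # pixel column and row indices
    z = depth
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    pts = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    return pts[pts[:, 2] > 0]  # drop pixels without a valid depth

# Hypothetical 4x6 depth map: a flat wall 2 m away, with one missing pixel
depth = np.full((4, 6), 2.0)
depth[0, 0] = 0.0
cloud = depth_to_points(depth, fx=500.0, fy=500.0, cx=3.0, cy=2.0)
print(cloud.shape)
```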

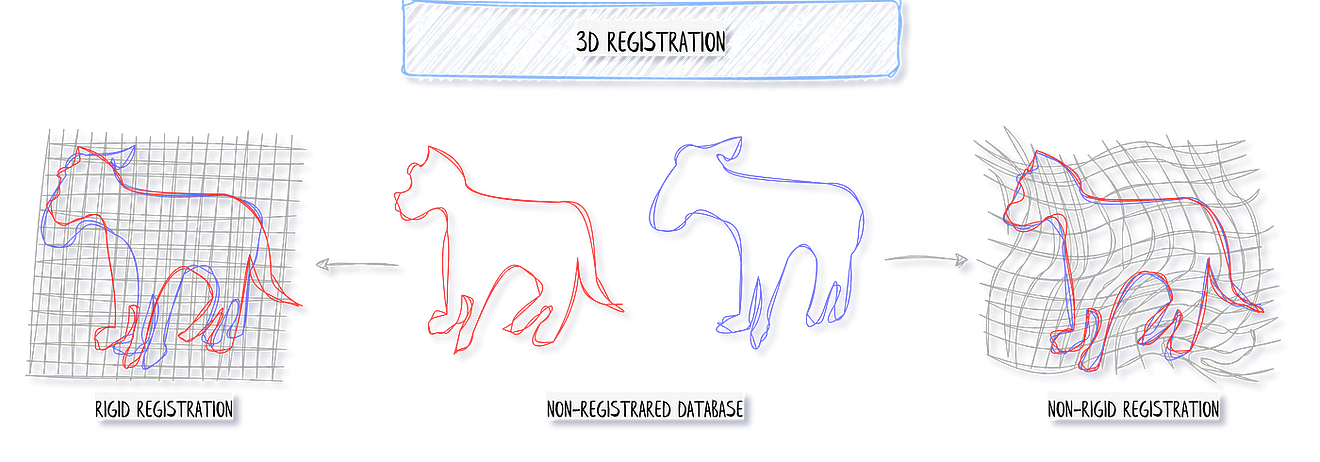

Registration + Sensor Fusion

The second step is registration. When registering, you want to bring various perspectives into one common frame of reference.

Here, you have two different approaches. The first one uses the same platform and the same sensor. The second one is multisensor registration, which is a bit more complicated. It is also where 3D deep learning will play a huge part, as hinted in the research article below.

📜 Research Article: A survey of LiDAR and camera fusion enhancement
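When correspondences between two views are known, the rigid transform that brings one into the frame of the other has a closed-form least-squares solution: the Kabsch method, based on an SVD. The sketch below assumes noise-free, already-matched points, and the rotation and translation values are hypothetical. In practice, correspondences come from feature matching or ICP iterations, and that matching step is where learned methods can help, especially across sensors.

```python
import numpy as np

def rigid_transform(src, dst):
    """Least-squares rotation R and translation t such that dst ≈ src @ R.T + t."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)  # 3x3 cross-covariance of centered points
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (no reflection)
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    t = dst_c - R @ src_c
    return R, t

rng = np.random.default_rng(3)
src = rng.normal(size=(100, 3))
angle = np.radians(30.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle),  np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([1.0, -2.0, 0.5])
dst = src @ R_true.T + t_true  # the same points seen from another viewpoint
R, t = rigid_transform(src, dst)
print(np.allclose(R, R_true), np.allclose(t, t_true))
```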

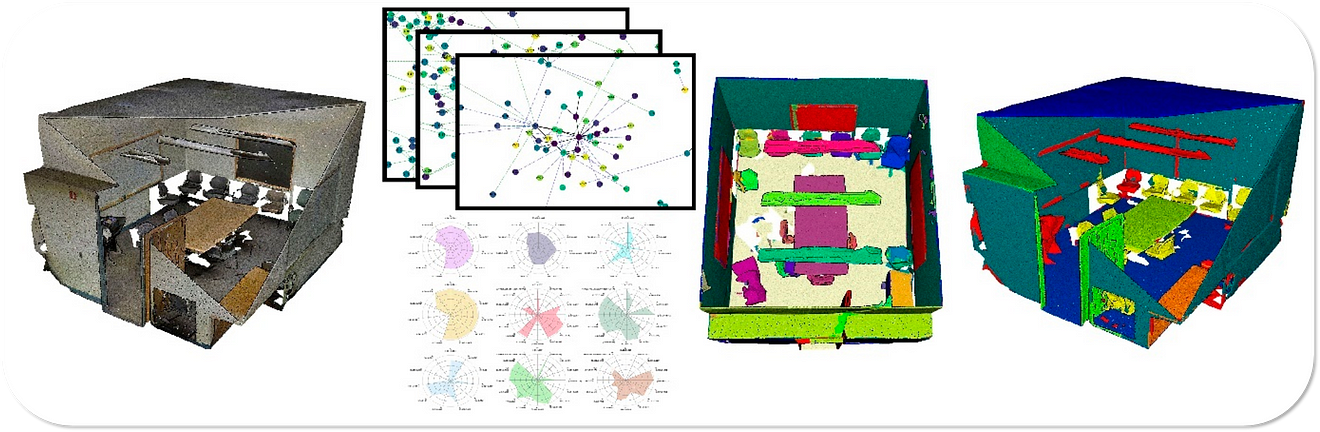

Segmentation and Clustering (Semantic Segmentation, Instance Segmentation, Part-Segmentation)

Then comes my favorite one: segmentation. Within segmentation modules, you want to detect groups of elements with or without explicit semantics.

We usually focus on segmentation with semantics, which falls into three categories.

- Semantic segmentation. For each base entity in your dataset (a point in your point cloud, a face in your 3D mesh, a voxel in a voxelized dataset), you predict its label from a table of predefined labels.

- Instance segmentation. You assign a label, exactly as semantic segmentation does, plus additional information about the instance number of each object per label. So this is chair number one, this is chair number two, and so on.

- Part segmentation. This is used to refine an initial object into its constituent parts, like a chair decomposed into the seat, backrest, and legs.
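In practice, these outputs are often stored as per-element label arrays. The toy NumPy example below uses a hypothetical class table, labels, and instance IDs to show how semantic labels and instance IDs combine to enumerate individual objects.

```python
import numpy as np

CLASSES = {0: "floor", 1: "chair", 2: "table"}  # hypothetical label table

# One entry per point: a semantic label and an instance ID within that class
semantic = np.array([0, 0, 1, 1, 1, 1, 2, 2, 1, 1])
instance = np.array([0, 0, 0, 0, 1, 1, 0, 0, 2, 2])

def list_objects(semantic, instance):
    """Return {(class name, instance id): point indices} for every object."""
    objects = {}
    for s, i in sorted(set(zip(semantic.tolist(), instance.tolist()))):
        objects[(CLASSES[s], i)] = np.flatnonzero((semantic == s) & (instance == i))
    return objects

for (name, i), idx in list_objects(semantic, instance).items():
    print(f"{name} #{i}: points {idx.tolist()}")
```

Part segmentation would follow the same pattern, with a third array assigning each point of a chair to, say, seat, backrest, or legs.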

🦚 Note: When you do not care about explicit semantics, we talk about clustering or unsupervised segmentation approaches. There, we want to delineate groups of points or elements in the scene that make sense together.

You can look at this article, which I wrote and which received the prestigious Jack Dangermond Award (best paper every 4 years):

📜 Research Article: Voxel-based 3D Point Cloud Semantic Segmentation: Unsupervised Geometric and Relationship Featuring vs Deep Learning Methods, by Florent Poux
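A common unsupervised baseline for this kind of grouping is Euclidean clustering. Points closer than a distance threshold are linked, and each connected component becomes a cluster. This is a swapped-in illustration, not the method from the article above. The O(N²) distance matrix, the 0.3 threshold, and the two synthetic blobs are assumptions suited only to small clouds; at scale, a k-d tree would replace the full distance matrix.

```python
import numpy as np
from collections import deque

def euclidean_clusters(points, radius):
    """Label the connected components of the 'closer than radius' graph via BFS."""
    n = len(points)
    dist = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=2)
    adjacency = dist < radius
    labels = np.full(n, -1)
    current = 0
    for seed in range(n):
        if labels[seed] != -1:
            continue  # already part of a cluster
        labels[seed] = current
        queue = deque([seed])
        while queue:  # breadth-first expansion of the current cluster
            p = queue.popleft()
            for q in np.flatnonzero(adjacency[p] & (labels == -1)):
                labels[q] = current
                queue.append(q)
        current += 1
    return labels

rng = np.random.default_rng(7)
blob_a = rng.normal([0.0, 0.0, 0.0], 0.05, size=(50, 3))
blob_b = rng.normal([1.0, 1.0, 0.0], 0.05, size=(50, 3))
labels = euclidean_clusters(np.vstack([blob_a, blob_b]), radius=0.3)
print(np.unique(labels))
```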

Scene Understanding (Classification, 3D Object Detection)

After that, you have the fourth workflow module: classification or 3D object detection. In classification, you take one whole object and predict what it is. In 3D object detection, you have a 3D scene, and the goal is usually to extract the 3D bounding box of each specific object from your point cloud.
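To make the detection target concrete, the sketch below does two things. It computes an axis-aligned 3D bounding box from a set of object points. It also computes the 3D Intersection-over-Union (IoU) commonly used to compare a predicted box with a ground-truth box. This is a simplification: many detectors, especially for driving, predict oriented boxes with a yaw angle, which this sketch ignores. The box coordinates are hypothetical.

```python
import numpy as np

def aabb(points):
    """Axis-aligned bounding box of an Nx3 point set as (min corner, max corner)."""
    return points.min(axis=0), points.max(axis=0)

def iou_3d(box_a, box_b):
    """Intersection-over-Union of two axis-aligned 3D boxes with non-zero volume."""
    (a_min, a_max), (b_min, b_max) = box_a, box_b
    # Overlap extent along each axis, clipped at zero when the boxes do not overlap
    overlap = np.clip(np.minimum(a_max, b_max) - np.maximum(a_min, b_min), 0.0, None)
    inter = overlap.prod()
    vol_a = (a_max - a_min).prod()
    vol_b = (b_max - b_min).prod()
    return inter / (vol_a + vol_b - inter)

object_points = np.array([[0.2, 0.1, 0.0], [1.8, 1.9, 1.5], [1.0, 0.5, 2.0]])
box = aabb(object_points)

gt = (np.array([0.0, 0.0, 0.0]), np.array([2.0, 2.0, 2.0]))
pred = (np.array([1.0, 0.0, 0.0]), np.array([3.0, 2.0, 2.0]))
print(box, iou_3d(gt, pred))
```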

📜 Research Article: Voxel-based 3D Point Cloud Semantic Segmentation: Unsupervised Geometric and Relationship Featuring vs Deep Learning Methods, by Florent Poux

Tracking, Compression, Completion

And finally, I group three modules into one: tracking, compression, and completion, which are very interesting for many applications as well.

Take body capture, for example: in the entertainment industry, you want to establish 3D correspondences to predict the motion of objects. Then, you have 3D shape modeling or 3D shape completion: you have a partial shape and want to complete it, or you want to create something out of nowhere. This is all very useful for making new datasets, creating new labeled datasets, and augmenting the data when we need to expand it.
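For the augmentation part, a simple and widely used recipe for point clouds has three steps: a random rotation about the vertical axis, a random uniform scaling, and a small Gaussian jitter. The `augment` helper and its ranges below are assumptions for illustration, not values from this article.

```python
import numpy as np

def augment(points, rng, max_scale_dev=0.1, jitter_sigma=0.01):
    """Return a randomly rotated (about Z), scaled, and jittered copy of an Nx3 cloud."""
    theta = rng.uniform(0.0, 2.0 * np.pi)
    c, s = np.cos(theta), np.sin(theta)
    rot_z = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    scale = rng.uniform(1.0 - max_scale_dev, 1.0 + max_scale_dev)
    jitter = rng.normal(0.0, jitter_sigma, size=points.shape)
    return scale * points @ rot_z.T + jitter

rng = np.random.default_rng(0)
cloud = rng.uniform(-1.0, 1.0, size=(1024, 3))
# Expand a dataset of one cloud into five augmented variants
variants = [augment(cloud, rng) for _ in range(5)]
print(len(variants), variants[0].shape)
```

Rotating only about Z keeps the "up" direction intact, which matters for scenes where gravity gives objects a natural orientation.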

🦚 Note: I know that’s a bit blurry at this stage if you’ve never put your hands in machine learning or deep learning. All of this will be clarified afterward to ensure that we have a common language.

Finally, once all that is relatively clear and you have all the ins and outs, we can move on to my roadmap recommendation and choose which of the three paths fits you.

Roadmap Recommendations for 3D Deep Learning

It is now time to choose your class. I made it pretty simple: three classes will unfold in front of your eyes. The goal is to select the one you most resonate with.

The Hobbyist

The first path I call the Hobbyist. That’s where you do not want to dive deep into the intricacies of 3D deep learning, but you are a creative mind wanting to leverage 3D tech to innovate. You want to be a user first, so you don’t necessarily need to code.

Let us detail the project to accomplish:

🗺️Project: I call this project 3D Deep Creation. You are going to find a way to generate 3D models from scratch using 3D Deep Learning (AI). The goal is to create 5 different 3D models, for example representing an animal, a video game hero, a science fiction starship, and a building ruin. Now, it is your turn.

📦Resources: not to leave you high and dry, a starting point can be to investigate Point-E or Luma Genie to complete this task. Happy creating!

The Engineer

The second path is what I call the Engineer: someone who loves to see the full picture and automate workflows to reach the objectives with as few actions as possible.

🗺️Project: The goal is to create a system that works and solves problems. The engineering project is to automate 3D reconstruction, so that you know it works. How can you ensure that someone who drops data in will get the assets they want? That is what automating this process means, and that will be the project for you.

📦Resources: You can design this with the same stack as the Hobbyist, then extend it with NeRF or a complete 3D photogrammetry pipeline.

The Researcher / Innovator

And finally, if you want to go really deep, be at the forefront of this technology, and push the boundaries of what we can do with it, the Researcher / Innovator path is for you.

🗺️Project: The project is to create a 3D R-CNN for semantic segmentation, detecting all the roads and trees in a 3D point cloud. It will also give you an excellent idea of whether this is something you like or are passionate about.

📦Resources: You can get your hands on a 3D point cloud by exploring this Towards Data Science article. And you can learn how to use PointNet by reading and following the PointNet Tutorial. If you want to expedite the process, you have the 3D Deep Learning Course at your fingertips.

Going Further: 3D Deep Learning Advanced Topics

Once this is behind you, what is next?

Well, new 3D Deep Learning applications that leverage Generative Adversarial Networks (GANs) and Large Language Models (LLMs) have huge potential to revolutionize 3D scene understanding.

Indeed, one of the most impactful capabilities of GANs is their ability to generate realistic 3D scenes from various inputs, including 2D images or text descriptions. By leveraging GANs, researchers can create synthetic training data, which is invaluable in scenarios with limited real-world data.

By pairing this with LLMs’ exceptional ability to understand and generate human language, researchers can interpret user descriptions and translate these descriptions into actionable instructions for scene manipulation within GAN-generated environments.

This unique synergy opens doors for intuitive human-computer interaction, enabling users to describe and modify 3D scenes seamlessly and simply.

The potential of these technologies to spawn exciting future applications is huge! 3D Deep Learning is incredible.

Outlook in the 3D Deep Learning Future

Once you master this, you are entitled to lie low for a couple of years. Buy a piece of land, grow some vegetables, make wine, and that’s your secret to unlocking freedom, right?

Jokes aside, if you want to dive deep there, I have a course, the 3D Deep Learning Course, on which Jean-Jacques Ponciano and I spent three years of full-time R&D. So, check it out if you want to speed up your growth.

🔗 Access the 3D Deep Learning Course

3D Deep Learning References

Other advanced segmentation methods for point clouds exist. It is a research field in which I am deeply involved, and you can already find some well-designed methodologies in the articles [1–6]. Some comprehensive tutorials are coming very soon for the more advanced 3D deep learning architectures!

- Poux, F., & Billen, R. (2019). Voxel-based 3D point cloud semantic segmentation: unsupervised geometric and relationship featuring vs. deep learning methods. ISPRS International Journal of Geo-Information, 8(5), 213. https://doi.org/10.3390/ijgi8050213 (Jack Dangermond Award; link to press coverage)

- Poux, F., Neuville, R., Nys, G.-A., & Billen, R. (2018). 3D Point Cloud Semantic Modelling: Integrated Framework for Indoor Spaces and Furniture. Remote Sensing, 10(9), 1412. https://doi.org/10.3390/rs10091412

- Poux, F., Neuville, R., Van Wersch, L., Nys, G.-A., & Billen, R. (2017). 3D Point Clouds in Archaeology: Advances in Acquisition, Processing and Knowledge Integration Applied to Quasi-Planar Objects. Geosciences, 7(4), 96. https://doi.org/10.3390/GEOSCIENCES7040096

- Poux, F., Mattes, C., & Kobbelt, L. (2020). Unsupervised segmentation of indoor 3D point cloud: application to object-based classification. ISPRS International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, 111–118. https://doi.org/10.5194/isprs-archives-XLIV-4-W1-2020-111-2020

- Poux, F., & Ponciano, J. J. (2020). Self-learning ontology for instance segmentation of 3D indoor point cloud. ISPRS International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, 309–316. https://doi.org/10.5194/isprs-archives-XLIII-B2-2020-309-2020

- Bassier, M., Vergauwen, M., & Poux, F. (2020). Point Cloud vs. Mesh Features for Building Interior Classification. Remote Sensing, 12, 2224. https://doi.org/10.3390/rs12142224