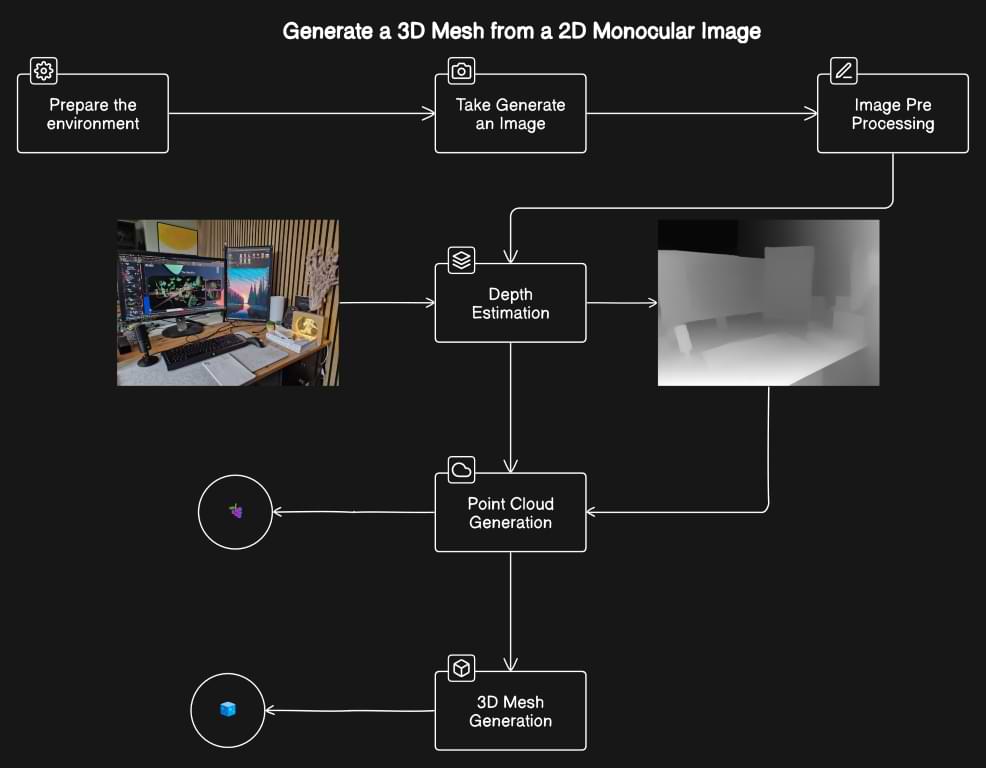

This tutorial targets Monocular Depth Estimation for 3D Reconstruction (Point Cloud, 3D Mesh). By the end, you will be able to generate 3D models from any photo or AI-generated image.

3D Reconstruction with 3D Deep Learning

Combining 3D reconstruction with the power of deep learning unlocks the ability to get 3D models from a single image. The technology behind this process has matured to the point where even new developers can create impressive models using just a few Python libraries and simple steps.

This tutorial is a step-by-step guide to using the power of deep learning to create 3D models from your own pictures using Depth Estimation Models.

3D Reconstruction: Environment Setup

The adventure starts by creating the right environment and a friction-free setup with Anaconda, critical Python modules, and your chosen Integrated Development Environment.

Installing packages like PyTorch, Pillow, Matplotlib, Transformers, and Open3D lays the groundwork for the stages that follow. For each step in the journey, from image preprocessing to the construction of a point cloud and the eventual creation of 3D meshes, the proper setup is super important.
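Before going further, it can help to confirm that the environment is complete. The snippet below is a minimal sketch that uses only the standard library to check whether each package is importable. The `REQUIRED` mapping and the `missing_packages` helper are names made up for this illustration, and the module names assume the usual import names (Pillow imports as `PIL`).

```python
import importlib.util

# Display name -> importable module name (assumed usual import names).
REQUIRED = {
    "PyTorch": "torch",
    "Pillow": "PIL",
    "Matplotlib": "matplotlib",
    "Transformers": "transformers",
    "Open3D": "open3d",
}


def missing_packages(required=REQUIRED):
    """Return the display names of packages that cannot be found."""
    return [
        name
        for name, module in required.items()
        if importlib.util.find_spec(module) is None
    ]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing packages: " + ", ".join(missing))
    else:
        print("Environment looks complete.")
```

Anything reported as missing can then be installed in the active Anaconda environment before moving on.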

Image Pre-Processing for 3D Reconstruction

Acquiring and preprocessing images are two indispensable stages in the process. They ensure that the inputs are properly prepared for the deep learning models that follow.

Using libraries such as Pillow for image processing, we can then resize and convert images into a format the network expects. This is crucial to successful neural network model performance, and it paves the way for more precise depth estimation and point cloud generation.

In addition, it lays the foundation for the more challenging stretches ahead and underscores the significance of meticulous data preparation for Depth Estimation.
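As a rough illustration of this preparation step, here is a minimal sketch that loads an image with Pillow and resizes it so that both sides are multiples of a fixed block size. The helper names, the height cap of 480 pixels, and the multiple of 32 are assumptions chosen for the example, not requirements stated in this tutorial; check what your depth model actually expects.

```python
def fit_size(width, height, max_height=480, multiple=32):
    """Compute a (width, height) pair that keeps the aspect ratio roughly
    intact while making both sides multiples of `multiple`.

    max_height and multiple are illustrative assumptions.
    """
    new_h = min(height, max_height)
    new_h = max(multiple, new_h - new_h % multiple)  # round height down
    raw_w = new_h * width / height
    new_w = max(multiple, round(raw_w / multiple) * multiple)  # nearest multiple
    return new_w, new_h


def load_and_resize(path):
    """Open an image, force RGB, and resize it with fit_size."""
    from PIL import Image  # imported lazily so fit_size works without Pillow

    image = Image.open(path).convert("RGB")
    return image.resize(fit_size(*image.size))
```

For instance, a 1920 x 1080 photo is mapped to 864 x 480 with these defaults.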

Monocular Depth Estimation

Imagine transforming a single photograph into a three-dimensional model. That’s the goal of 3D reconstruction, a field that takes 2D images and breathes life into them by creating digital representations of real-world objects and scenes. This can be done with multiple cameras capturing the scene from different angles, but a particularly intriguing challenge is monocular depth estimation. Here, the aim is to achieve 3D reconstruction using a single image, mimicking how humans can still perceive depth from monocular cues, even though we have two eyes.

Monocular depth estimation tackles this challenge by leveraging sophisticated algorithms, often powered by deep learning. These algorithms analyze the image for subtle cues, such as shadows, textures, and object sizes, to infer how far away different parts of the scene are from the camera. This depth information is then used to build a 3D model, opening doors for applications in robotics, autonomous vehicles, and even virtual reality.

In this tutorial, we use one such model to grasp the entire workflow.
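To make the idea concrete, here is a minimal sketch of running a pretrained depth estimation model through the Transformers library. The choice of the GLPN architecture and of the `vinvino02/glpn-nyu` checkpoint is an assumption made for this example; the text above does not name a specific model, and other depth estimation checkpoints could be swapped in. The `estimate_depth` function name is also hypothetical.

```python
def estimate_depth(image, checkpoint="vinvino02/glpn-nyu"):
    """Predict a depth map for a PIL image.

    The checkpoint is an assumed example, not prescribed by the tutorial.
    Returns a 2D NumPy array of predicted depth values.
    """
    # Heavy dependencies are imported lazily, only when the function is called.
    import torch
    from transformers import GLPNForDepthEstimation, GLPNImageProcessor

    processor = GLPNImageProcessor.from_pretrained(checkpoint)
    model = GLPNForDepthEstimation.from_pretrained(checkpoint)
    model.eval()

    inputs = processor(images=image, return_tensors="pt")
    with torch.no_grad():  # inference only, no gradients needed
        outputs = model(**inputs)

    # predicted_depth has shape (batch, height, width); drop the batch axis.
    # The processor may resize the input, so the map can differ in size
    # from the original image.
    return outputs.predicted_depth.squeeze(0).cpu().numpy()
```

The first call downloads the model weights, so it needs an internet connection and some patience.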

From 2D to 3D Point Clouds with 3D Reconstruction

Now that we’ve covered image preprocessing, let’s focus on point cloud generation to unlock a full 3D Reconstruction.

This is where we convert our 2D images into very detailed 3D models. Using Open3D software, we take the depth data from the previous step and use it to make a point cloud of the space those images show. This is a critical step because it takes us from traditional image processing into the more advanced world of 3D modeling and visualization.
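The conversion from a depth map to a point cloud relies on the pinhole camera model: a pixel (u, v) with depth z is back-projected to x = (u - cx) * z / fx and y = (v - cy) * z / fy. The sketch below shows that formula on a single pixel, then lets Open3D apply it to a whole image. The function names, the focal length of 500 pixels, and the 10 m truncation distance are assumptions for illustration only, since a single photo carries no camera calibration.

```python
def backproject(u, v, z, fx, fy, cx, cy):
    """Back-project one pixel with depth z to camera-space (x, y, z)."""
    return ((u - cx) * z / fx, (v - cy) * z / fy, z)


def make_point_cloud(rgb, depth_m, focal=500.0, depth_trunc=10.0):
    """Build a colored Open3D point cloud from an RGB array (H, W, 3) and a
    depth array in metres (H, W). focal and depth_trunc are guessed values.
    """
    import numpy as np
    import open3d as o3d

    if rgb.shape[:2] != depth_m.shape:
        raise ValueError("rgb and depth must have the same height and width")
    height, width = depth_m.shape

    # Store depth as 16-bit millimetres, a format Open3D reads directly.
    depth_mm = np.clip(depth_m * 1000.0, 0, 65535).astype(np.uint16)
    color_img = o3d.geometry.Image(np.ascontiguousarray(rgb, dtype=np.uint8))
    depth_img = o3d.geometry.Image(np.ascontiguousarray(depth_mm))

    rgbd = o3d.geometry.RGBDImage.create_from_color_and_depth(
        color_img, depth_img,
        depth_scale=1000.0,            # millimetres back to metres
        depth_trunc=depth_trunc,       # points farther than this are dropped
        convert_rgb_to_intensity=False,
    )
    # Principal point assumed to sit at the image center.
    intrinsic = o3d.camera.PinholeCameraIntrinsic(
        width, height, focal, focal, width / 2.0, height / 2.0
    )
    return o3d.geometry.PointCloud.create_from_rgbd_image(rgbd, intrinsic)
```

With a guessed focal length, the result has a plausible shape but no reliable metric scale.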

3D Point Cloud to 3D Mesh

The surface reconstruction phase introduces us to the procedure of creating meshes using the Poisson surface reconstruction algorithm.

By reconstructing the surface from the 3D point cloud, we obtain a detailed representation of the scene as a mesh full of color. The whole process is an excellent example of the power of deep learning in transforming raw data into remarkable visuals. It also hints at the prospective applications of this technology and how many businesses, in virtual reality for instance, may profit from it.

As we journey through the intricacies of 3D mesh generation, we must address filtering out noise, estimating normals, and removing outliers.

Each of these tasks demands care and some technical know-how to see the mesh through to the end with accuracy.

We can raise the quality of the mesh and bring it closer to the real scene if we keep tweaking parameters, experimenting with algorithms, and finding the right balance between preserving detail and suppressing noise.
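The steps named in this section (outlier removal, normal estimation, Poisson reconstruction) can be sketched with Open3D as follows. The function name and every parameter value here (20 neighbors, a standard deviation ratio of 2.0, an octree depth of 10) are assumptions to be tuned per scene, not values prescribed by this tutorial.

```python
def point_cloud_to_mesh(pcd, depth=10, nb_neighbors=20, std_ratio=2.0):
    """Turn an Open3D point cloud into a triangle mesh.

    All default parameter values are illustrative starting points.
    """
    import open3d as o3d

    # 1. Drop points whose mean neighbor distance is unusually large.
    clean, _kept_indices = pcd.remove_statistical_outlier(
        nb_neighbors=nb_neighbors, std_ratio=std_ratio
    )

    # 2. Poisson reconstruction needs consistently oriented normals.
    #    The camera is assumed to sit at the origin.
    clean.estimate_normals()
    clean.orient_normals_towards_camera_location([0.0, 0.0, 0.0])

    # 3. A higher octree depth gives more detail but also more noise.
    mesh, _densities = o3d.geometry.TriangleMesh.create_from_point_cloud_poisson(
        clean, depth=depth
    )
    return mesh
```

Lowering `depth` tends to give a smoother mesh, while raising it keeps finer detail at the cost of runtime and sensitivity to noise.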

3D Reconstruction Quality Control

The last part of the procedure is exporting the mesh and inspecting the results in 3D modeling applications. By exporting the mesh in widely supported formats such as OBJ or PLY, we can further refine and polish the 3D model in dedicated software tools. This step marks the end of our journey through deep learning and 3D data processing, and shows the impact of modern tools on how we work with data.
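A minimal export sketch, assuming the mesh is an Open3D `TriangleMesh`, could look like this. The helper names `checked_path` and `export_mesh` are hypothetical, and the extension check simply mirrors the two formats mentioned above.

```python
from pathlib import Path

SUPPORTED = {".obj", ".ply"}  # the two formats mentioned in the text


def checked_path(path):
    """Return the path as a Path object, rejecting unsupported extensions."""
    path = Path(path)
    if path.suffix.lower() not in SUPPORTED:
        raise ValueError(f"Unsupported mesh format: {path.suffix!r}")
    return path


def export_mesh(mesh, path):
    """Write an Open3D triangle mesh to disk as OBJ or PLY."""
    import open3d as o3d

    target = checked_path(path)
    # write_triangle_mesh returns False when the export fails.
    if not o3d.io.write_triangle_mesh(str(target), mesh):
        raise IOError(f"Could not write mesh to {target}")
    return target
```

The exported file can then be opened in a 3D modeling application for visual inspection.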

Conclusion

To sum up, combining deep learning with 3D data processing fundamentally changes how we engage with and alter visual information.

Producing 3D datasets from single images showcases the impressive advancements enabled by up-to-date technologies that allow us to convert basic observations into captivating 3D replicas.

By following a sequential workflow and using essential Python tools, practitioners in this area can expand the options available for data presentation, interpretation, and modeling. In doing so, they open the door not only to sophisticated uses but also to a promising future across a wide spectrum of activities.

My 3D Recommendation 🍉

Generating 3D Models from Single Images is fantastic. It opens up a lot of horizons, though for creative purposes only (at this stage). If you are seeking a more metrically controlled approach, I highly recommend getting expertise with Photogrammetry to unlock Multi-View Reconstruction. It is more constraining than single images but provides a much better geometric base layer. If you want to use it in your solutions, I recommend checking out the 3D Collector’s Pack.

If you want to get a tad more toward application-first or depth-first approaches, I curated several learning tracks and courses on this website to help you along your journey. Feel free to follow the ones that best suit your needs, and do not hesitate to reach out directly to me if you have any questions or if I can advise you on your current challenges!

Open-Access Knowledge

- Medium Tutorials and Articles: Standalone Guides and Tutorials on 3D Data Processing (5′ to 45′)

- Research Papers and Articles: Research Papers published as part of my public R&D work.

- Email Course: Access a 7-day E-Mail Course to Start your 3D Journey

- YouTube Education: Not articles, not research papers, but open videos to learn everything around 3D.

3D Online Courses

- 3D Python Crash Course (Complete Standalone): 3D Coding with Python.

- 3D Photogrammetry Course (Complete Standalone): Open-Source 3D Reconstruction.

- 3D Point Cloud Course (Complete Standalone): Pragmatic, Complete, and Commercial Point Cloud Workflows.

- 3D Object Detection Course (3D Python Recommended): Practical 3D Object Detection with 3D Python.

3D Learning Tracks

- 3D Segmentation Deck: From Classical 3D Segmentation to 3D Deep Learning and Unsupervised Applications

- 3D Collector’s Pack: Complete Course Track to address both 3D Application and Code Layers.