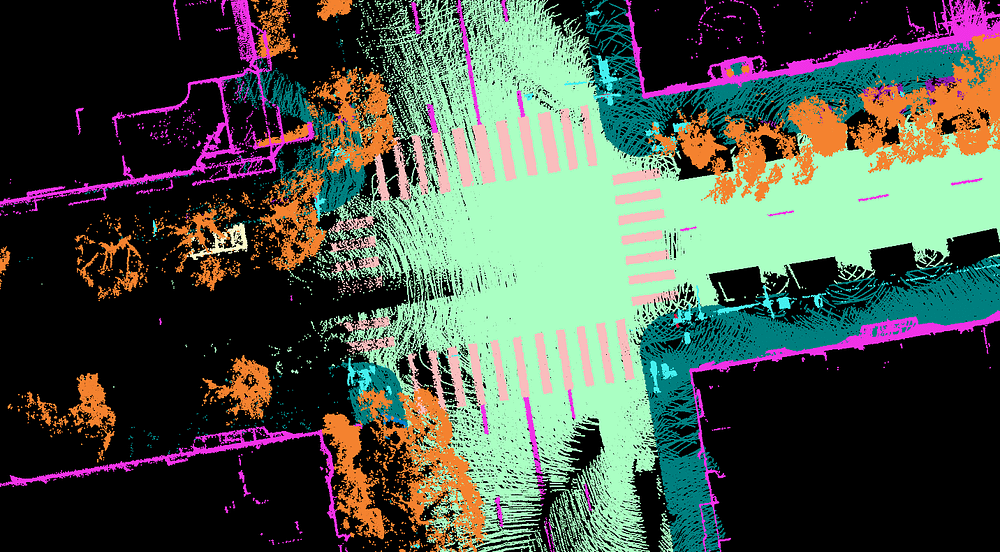

Scale AI released a new LiDAR point cloud dataset, and accelerate the growth of Autonomous Driving research.

Point Cloud Data labelling

Data labelling, also called data annotation/tagging/classification, is the process of tagging (i.e. labelling) datasets with labels. The quality of this process is essential for Supervised Machine Learning algorithms. They learn patterns from labelled data before trying to predict labels by identifying the same patterns in unlabeled datasets.

For self-driving car applications, we most often avoid explicitly programming machine learning algorithms on how to make decisions. Instead we feed deep learning (DL) models with labelled data to learn from. Indeed, DL models can get better with more data, seemingly without limit. However, to get a well-functioning model, it is not enough to just have large amounts of data, you also need high-quality data annotation.

LiDAR point cloud

With this in mind, Scale AI aims at delivering training data for AI applications such as self-driving cars, mapping, AR/VR, and robotics. Scale CEO and co-founder Alexandr Wang told TechCrunch in a recent interview: “Machine learning is definitely a garbage in, garbage out kind of framework — you really need high-quality data to be able to power these algorithms. It’s why we built Scale and it’s also why we’re using this data set today to help drive forward the industry with an open-source perspective.”

In collaboration with the lidar manufacturer Hesai, the company released a new dataset called PandaSet that can be used for training machine learning models, e.g. applied to autonomous driving challenges. The dataset is free and licensed for academic and commercial use and includes data collected using Hesai’s forward-facing (Solid-State) PandarGT LiDAR as well as a mechanical spinning LiDAR known as Pandar64.

The data was collected while driving urban areas in San Francisco and Silicon Valley before officials issued the stay-at-home COVID-19 orders in the area (according to the company).

The dataset features

- 48,000 camera images

- 16,000 LiDAR sweeps

- +100 scenes of 8s each

- 28 annotation classes

- 37 semantic segmentation labels

- Full sensor suite: 1x mechanical LiDAR, 1x solid-state LiDAR, 6x cameras, On-board GPS/IMU

It is freely downloadable at this link.

PandaSet includes 3D Bounding boxes for 28 object classes and a rich set of class attributes related to activity, visibility, location, pose. The dataset also includes Point Cloud Segmentation with 37 semantic labels. These include smoke, car exhaust, vegetation, and driveable surface within complex urban environments filled with cars, bikes, traffic lights, and pedestrians.

While other great Open-source autonomous vehicle dataset exist, this is a new effort to license datasets without any restrictions.

Open-source Self-driving cars datasets

I gathered below four other datasets that are of high quality and will certainly be useful for your Machine Learning / Self-Driving Cars projects. These can then be used with the 3D Geodata Academy, among the formations 3D Reconstructor, 3D segmentor and VR/AR Creator. You can start point cloud processing now with this.

- Waymo Open Dataset

- nuScenes by Aptiv

- Peking University/Baidu Apollo Scape

- University of California BDD100K

Originally published in Towards Data Science